As a database administrator, I once relied on DELETE to purge large volumes of obsolete records. Over time, I discovered this approach caused hidden performance and storage headaches. In this post, I’ll share what I learned about InnoDB’s storage model, the pitfalls of bulk DELETE, and the best practices I now follow.

Understanding InnoDB’s Storage Architecture

InnoDB stores data in tablespaces, segments, extents (groups of 32 pages), and pages (16 KB each by default). When you delete rows, InnoDB simply marks them as deleted—it doesn’t return space to the operating system. As a result, the .ibd file stays the same size, leaving unused “holes” in your tablespace.

My Experiment: DELETE Doesn’t Free Space

- I created an empty table and saw its

user.ibdfile occupy about 96 KB. - I inserted 100 000 rows; the file grew to ~14 MB.

- I ran

DELETE FROM user LIMIT 50000;and checked again—the file remained ~14 MB, even after deleting half the rows.

This confirmed that InnoDB only flags rows as deleted; it doesn’t shrink the tablespace automatically.

How DELETE Impacts Query Performance

Even after deleting 50% of rows, my SELECT queries scanned the same number of pages and incurred the same I/O costs. The only difference was fewer rows returned. Bulk deletions therefore don’t reduce query scan time or I/O, yet leave your storage fragmented.

Best Practices I Now Follow

- Logical Deletion: I add an

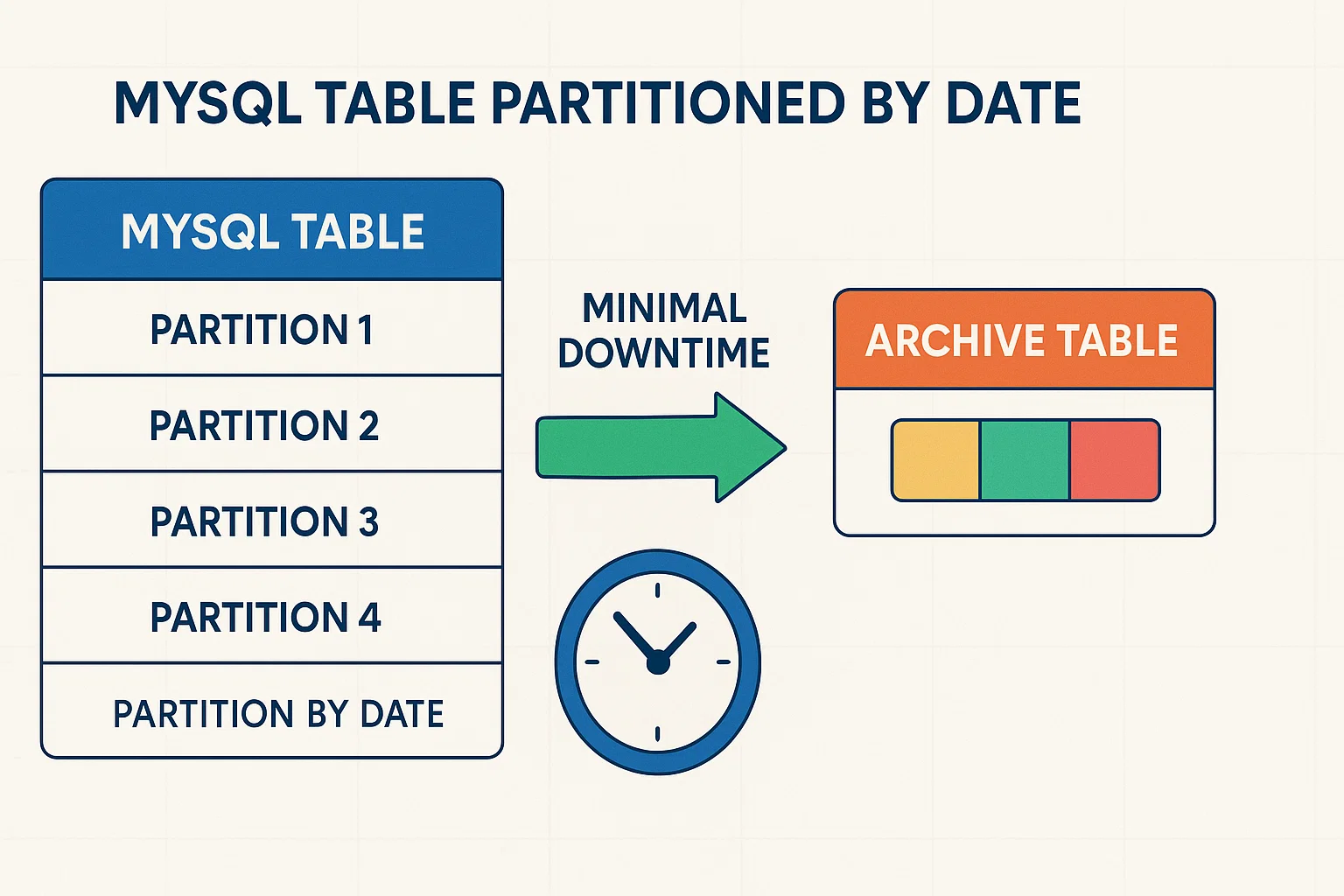

is_deletedflag and update it instead of physically deleting. Queries simply filterWHERE is_deleted = 0, avoiding fragmentation. - Partition Archiving: By partitioning tables on date (or another key) and using

ALTER TABLE … EXCHANGE PARTITION, I can quickly move old partitions to archive tables withoutDELETE. - Permission Controls: I grant only

SELECT, INSERT, UPDATEto application accounts—noDELETE—to prevent accidental bulk removals. - Dedicated Archive Systems: For truly historical data, I offload cold records to ClickHouse or Elasticsearch, using TTL policies to auto-purge old entries.

Conclusion

Through these strategies, I maintain a lean, performant database without the pitfalls of bulk DELETE. If you’re wrestling with large-scale data cleanup, consider logical deletes, partition archiving, and proper permissions to keep your MySQL environment healthy and efficient.

FoxDoo Technology

FoxDoo Technology