AI agents for developers are finally being judged by the only metric that matters—do they help us ship better software, faster, with fewer surprises? I remember a Thursday night last winter when our release candidate started failing in staging. Our tiny agent looked at the logs, grabbed a recent runbook, and suggested rolling back a questionable feature flag. It wasn’t glamorous. It was calm and specific. We still reviewed the plan, but that little helper cut thirty minutes from a sticky incident and let us ship before midnight.

That’s the energy of this piece. No theatrics, just field-tested moves for building agent systems that don’t flake out under pressure. We’ll look at the architecture that keeps you sane, the retrieval that keeps you grounded, the guardrails that keep you safe, and the observability that earns trust. Along the way I’ll share what’s worked in real projects—and a few missteps I wouldn’t repeat. If you’ve been curious about where AI agents for developers actually fit, this guide lays out the map.

Why AI Agents for Developers Matter Now

Two things changed at once. Models got better at following structure, and our tool stacks got easier to expose in safe, typed ways. That combination moved agents from novelty to utility. For everyday engineering, an agent that reads traces, calls a function with strict arguments, and proposes a short, reviewable patch can remove a lot of drag. The result isn’t magic; it’s fewer context switches and more time in the flow state. Teams adopting AI agents for developers aren’t trying to “replace coding”; they’re trying to remove glue work—ticket triage, repetitive commentary, obvious refactors, routine lookups, and checklist-heavy operations.

I’m gonna be honest: agents also force discipline. If your runbooks are stale, your APIs undocumented, or your dashboards scattered across five tools, the agent will surface that pain. Treat that as a feature. The same cleanup that helps your teammates will help your agent do real work without guessing.

Architecture That Stays Boring (On Purpose)

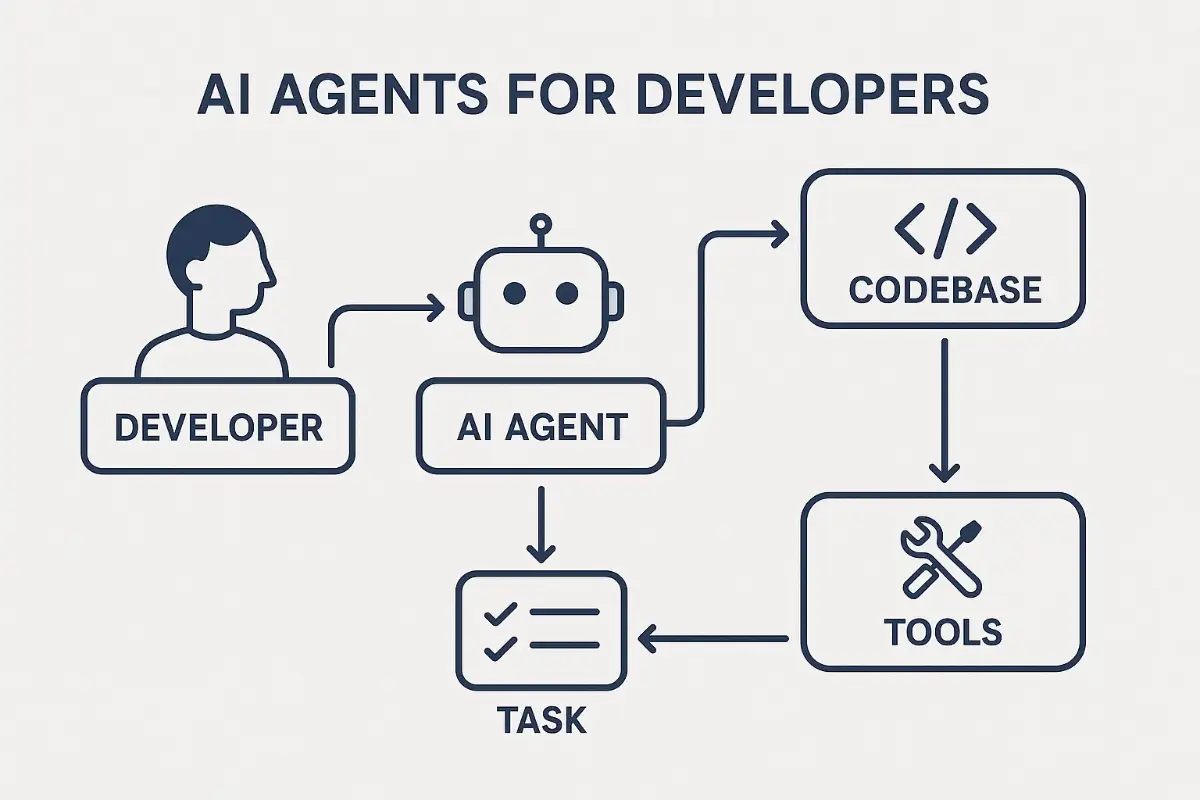

Great agent systems feel a little boring. That’s a compliment. Boring means predictable, observable, and replaceable in parts. Here’s a reference shape I use when I build AI agents for developers in production:

- Planner: decides the next safe action; produces a structured plan.

- Retrieval: fetches fresh, scoped context (logs, docs, symbols, metrics).

- Executor: calls typed tools; validates inputs and outputs.

- Verifier: checks the artifact against a checklist; blocks if risky.

- Artifact store: keeps patches, tickets, summaries, and provenance.

- Trace pipeline: records spans, tokens, costs, and failure modes.

```
// Pseudo-interface for the loop
plan := planner.decide(task, context)
for step in plan.steps {
  if step.action is tool:
    result := executor.call(step.tool, step.args)  // schema-checked
    context := context + result
  if step.action is deliverable:
    ok, issues := verifier.check(step)
    if ok: return deliverable
    else: attach issues and escalate
}
```

Keep the interfaces honest. The planner outputs JSON with a known schema. The executor only sees validated arguments. The verifier runs on artifacts, not vibes. If something goes sideways, the trace makes it obvious which box misbehaved.

Designing the Tool Layer the Right Way

Tools are where agents stop talking and start doing. Design them like you would any public API. For AI agents for developers the difference between “cool demo” and “daily driver” often lives in four details:

- Typed arguments: enforce schemas and default values; reject surprises.

- Human-readable descriptions: a one-sentence “when to use” saves tokens and mistakes.

- Least privilege: grant the minimum scope; separate read-only and write paths.

- Rate limits & timeouts: each tool owns its own budget to protect the system.

```typescript
// TypeScript example of a tool registry
type DeployCanaryArgs = {
  service: string;
  image: string;
  percent: number;
};

type Result<T> = { ok: true; data: T } | { ok: false; error: string };

interface Tool<Args, Out> {
  name: string;
  description: string;
  call: (args: Args) => Promise<Result<Out>>;
  timeoutMs: number;
}

const tools = {
  "deploy_canary": <Tool<DeployCanaryArgs, { url: string }>>{
    name: "deploy_canary",
    description: "Roll out a canary for a service; 0-25% only.",
    timeoutMs: 120_000,
    async call(args) {
      // Enforce the documented 0-25% range on both ends, not just the upper bound.
      if (args.percent < 0 || args.percent > 25) {
        return { ok: false, error: "percent out of range (0-25)" };
      }
      // ... call CI/CD with scoped token ...
      return { ok: true, data: { url: "https://canary.example/status" } };
    }
  }
} as const;
```

Give each tool a short “contract doc” next to the code: purpose, limits, and example calls. Your future self will thank you when you debug a midnight incident and can see exactly what the agent was allowed to do.
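To make the "each tool owns its own budget" idea concrete, here is a minimal Python sketch of a per-tool token bucket. The `ToolBudget` class and its parameters are hypothetical names chosen for illustration, not part of any library; a real system would likely also enforce the tool's timeout around the call.

```python
import time
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ToolBudget:
    """Token-bucket rate limit owned by a single tool (hypothetical helper)."""
    capacity: int            # maximum calls allowed in a burst
    refill_per_sec: float    # calls restored per second
    tokens: float = field(init=False)
    last_refill: float = field(init=False)

    def __post_init__(self) -> None:
        self.tokens = float(self.capacity)
        self.last_refill = time.monotonic()

    def try_acquire(self, now: Optional[float] = None) -> bool:
        """Spend one token and return True if the tool may be called now."""
        now = time.monotonic() if now is None else now
        elapsed = max(0.0, now - self.last_refill)
        # Refill in proportion to elapsed time, never above capacity.
        self.tokens = min(float(self.capacity), self.tokens + elapsed * self.refill_per_sec)
        self.last_refill = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False
```

The executor would check `try_acquire()` before each call and return a structured error when it comes back `False`, so one chatty tool cannot starve the rest of the system.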

Retrieval That Grounds the Conversation

Your agent is only as good as what it can see. Aim for retrieval that’s narrow and fresh. For code-heavy work, blend symbol-level search (functions, classes, modules) with chunked docs and a small set of “fast facts” like recent deploys and active incidents. The goal is cheap, relevant context that keeps answers current without drowning the planner. In practice, AI agents for developers work best when they read just enough to act, then fetch more only if needed.

- Split indexes: one dense “FAQ” index for quick facts, one sparse index for deeper docs.

- Attach provenance: return a machine-readable list of sources for audit trails.

- Age-aware search: prefer documents touched this week for anything operational.

- Symbols first: jump to the function that failed instead of scanning a whole file.

```python
# faq_index, symbol_index, and status_api stand in for your own index and status clients.
def retrieve(task: str) -> dict:
    """Return fresh, scoped context for the planner."""
    facts = faq_index.search(task, k=5)          # fast, tiny embeddings
    symbols = symbol_index.lookup(task, k=10)    # functions/classes/modules
    incidents = status_api.recent(hours=6)       # hot operational context
    return {"facts": facts, "symbols": symbols, "incidents": incidents}
```

If you’ve got an older codebase, start small: index only the top five packages and the runbooks that actually get used. You’ll get 80% of the benefit with 20% of the work.
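The "age-aware search" and "attach provenance" bullets above can be combined in a small ranking step. This is a sketch under stated assumptions: the `Doc` shape, the seven-day freshness window, and the 0.5 stale penalty are illustrative choices, not values from a particular search library.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class Doc:
    source: str           # provenance: path or URL of the document
    score: float          # relevance score from the index, higher is better
    updated_at: datetime  # last time the document was touched


def rank_age_aware(docs, now, fresh_days=7, stale_penalty=0.5, k=5):
    """Rank docs by relevance, down-weighting anything older than fresh_days."""
    cutoff = now - timedelta(days=fresh_days)

    def adjusted(doc):
        return doc.score if doc.updated_at >= cutoff else doc.score * stale_penalty

    top = sorted(docs, key=adjusted, reverse=True)[:k]
    # Return sources alongside the docs so the audit trail is machine-readable.
    return {"docs": top, "sources": [d.source for d in top]}
```

A stale runbook with a high raw score can still appear, but a fresher document with a moderately lower score will outrank it, which is usually what you want for operational questions.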

Planning and Decomposition Without the Drama

Plans should fit on one screen. When they don’t, it’s usually because the agent is missing a tool or chasing two goals at once. Keep plans short and decisive. Here’s a minimal structure that has worked well for AI agents for developers:

```json
{
  "goal": "Reduce error rate in checkout service",
  "steps": [
    {"action": "read_logs", "args": {"service": "checkout", "level": "ERROR", "limit": 50}},
    {"action": "retrieve_runbook", "args": {"topic": "db-timeouts"}},
    {"action": "propose_patch", "args": {"path": "retry_policy.ts", "pattern": "backoff"}},
    {"action": "open_pr", "args": {"title": "Reduce DB timeout flaps (retry tweak)"}}
  ],
  "stop_condition": "Verifier approves patch or budget exhausted"
}
```

Always include a stop condition. It curbs runaway loops and makes cost predictable. Pair it with a “message budget” so the agent knows when to summarize, escalate, or bail with partial results.
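One way to pair a stop condition with a message budget is a small counter the loop consults after every step. The `RunBudget` class and the four outcome strings below are hypothetical; the ordering (approval first, then step budget, then message budget) is one reasonable choice, not the only one.

```python
from dataclasses import dataclass


@dataclass
class RunBudget:
    """Tracks how much of a run's step and message budget has been spent."""
    max_steps: int
    max_messages: int
    steps: int = 0
    messages: int = 0

    def record(self, steps: int = 0, messages: int = 0) -> None:
        self.steps += steps
        self.messages += messages

    def next_move(self, verifier_approved: bool) -> str:
        """Decide what the loop should do next."""
        if verifier_approved:
            return "stop"        # stop condition met: deliver the artifact
        if self.steps >= self.max_steps:
            return "escalate"    # out of steps: hand partial results to a human
        if self.messages >= self.max_messages:
            return "summarize"   # context is getting long: compress before continuing
        return "continue"
```

Because the decision is a plain function of counters, it is easy to unit test and easy to read in a trace.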

A Verifier That Catches the Obvious

Cheap, focused verification blocks unnecessary pain. Before merging a patch, run a small model or rule set that checks the basics: path exists, diff compiles, tests run, no secrets, no dangerous shell calls. For AI agents for developers this extra pass pays for itself quickly.

```python
# compiles() is assumed to come from your build tooling; it is not defined here.
def verify_patch(diff: str) -> list[str]:
    issues = []
    if "rm -rf" in diff or "DROP TABLE" in diff:
        issues.append("dangerous command")
    if len(diff) > 20000:
        issues.append("diff too large")
    if not compiles(diff):
        issues.append("does not compile")
    return issues
```

If the verifier flags anything high risk, stop and summarize. A human reviewer can take it from there with clear breadcrumbs.
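The "no secrets" check mentioned above can start as a handful of regular expressions applied only to added lines. The patterns below are a deliberately small, illustrative set (an AWS-style access key ID, a PEM private key header, and quoted credentials assigned to obvious names); a dedicated secret scanner will catch far more.

```python
import re

# Illustrative patterns only; extend or replace with a real scanner in production.
SECRET_PATTERNS = {
    "aws access key id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private key block": re.compile(r"-----BEGIN (?:[A-Z]+ )?PRIVATE KEY-----"),
    "hardcoded credential": re.compile(
        r"(?i)\b(?:password|secret|api_key|token)\b\s*[:=]\s*['\"][^'\"]{8,}['\"]"
    ),
}


def find_secrets(diff: str) -> list[str]:
    """Return one issue per suspicious pattern found on an added diff line."""
    issues = []
    for line in diff.splitlines():
        # Only inspect added lines; skip the "+++ b/path" file header.
        if not line.startswith("+") or line.startswith("+++"):
            continue
        for label, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                issues.append(f"possible {label}")
    return issues
```

The returned list can be appended to the verifier's other issues so a single non-empty result blocks the patch.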

Observability: Traces You Can Read in a Hurry

Real trust comes from visibility. Instrument the life of a request: plan, retrieval, each tool call, each verifier check, final artifact. Include model name, token counts, latency, cache hits, and costs. Even a simple dashboard tells you where time and money go, and where errors concentrate. When we turned on structured tracing, we discovered that one “innocent” doc tool was eating 40% of the budget. Quick fix, immediate win.

For teams adopting AI agents for developers, it’s worth skimming high-level industry resources so everyone shares vocabulary and expectations. Two good starting points are the GitHub Octoverse and the Stack Overflow Developer Survey 2025—both helpful for context on tool adoption and workflow changes without getting lost in hype.

```
# Example span names to standardize
- agent.plan
- agent.retrieve
- agent.tool.read_logs
- agent.tool.open_pr
- agent.verify
- agent.finalize
```

Don’t overthink dashboards. A few latency histograms, a tool-call tree, and a small list of the top failure reasons will carry you a long way.
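If you are not ready to adopt a tracing library, a few lines of Python are enough to see where budget goes. This is a minimal in-memory sketch; the `Tracer` class and its field names are assumptions for illustration, and a production setup would export spans to a real tracing backend instead.

```python
import time
from collections import defaultdict
from contextlib import contextmanager


class Tracer:
    """Collects spans in memory so cost and latency can be summarized."""

    def __init__(self):
        self.spans = []

    @contextmanager
    def span(self, name, **attrs):
        record = {"name": name, "cost_usd": 0.0, "tokens": 0, "error": None, **attrs}
        start = time.perf_counter()
        try:
            yield record  # the caller fills in cost_usd and tokens
        except Exception as exc:
            record["error"] = type(exc).__name__
            raise
        finally:
            record["latency_ms"] = (time.perf_counter() - start) * 1000.0
            self.spans.append(record)

    def cost_share(self):
        """Return each span name's fraction of total recorded cost."""
        totals = defaultdict(float)
        for s in self.spans:
            totals[s["name"]] += s["cost_usd"]
        grand = sum(totals.values())
        return {name: (cost / grand if grand else 0.0) for name, cost in totals.items()}
```

Sorting `cost_share()` by value is the quickest way to spot a tool that is quietly consuming a large slice of the budget.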

Security: Make the Right Path the Easy Path

Agents repeat what they read, and they act on what you expose. Treat untrusted content as untrusted code. Escape HTML, block system-style tokens in external input, and validate arguments against allowlists. Rotate credentials, and keep separate service identities for each tool. When AI agents for developers push buttons in production, least privilege isn’t optional.

- Run “red-team” prompts in CI that try to exfiltrate secrets or escalate privileges.

- Snapshot risky outputs for audit: what did the agent see; what did it propose; who approved it.

- Publish a one-page policy that defines which tasks can be fully automated and which require approval.

For policy discussions, many teams reference community resources such as the OWASP Top 10 for LLM Applications. It’s a solid checklist for thinking through common risks and mitigations.
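Two of the controls above, sanitizing untrusted text and validating arguments against allowlists, fit in a short sketch. Everything named here is an assumption for illustration: the service and level allowlists, the `MAX_LIMIT` value, and the `<|...|>` shape used to represent "system-style tokens" (adjust the pattern to whatever control markers your model actually uses).

```python
import html
import re

ALLOWED_SERVICES = {"checkout", "search"}      # hypothetical allowlist
ALLOWED_LEVELS = {"ERROR", "WARN", "INFO"}     # hypothetical allowlist
MAX_LIMIT = 500                                # hypothetical upper bound
CONTROL_TOKEN = re.compile(r"<\|[^|>]*\|>")    # e.g. "<|system|>"


def sanitize_untrusted(text: str) -> str:
    """Strip control-style tokens, then escape HTML in external content."""
    return html.escape(CONTROL_TOKEN.sub("", text))


def validate_read_logs_args(args: dict) -> list[str]:
    """Return a list of problems; an empty list means the call may proceed."""
    issues = []
    service = args.get("service")
    if not isinstance(service, str) or service not in ALLOWED_SERVICES:
        issues.append("service not in allowlist")
    level = args.get("level")
    if not isinstance(level, str) or level not in ALLOWED_LEVELS:
        issues.append("level not in allowlist")
    limit = args.get("limit")
    if isinstance(limit, bool) or not isinstance(limit, int) or not 1 <= limit <= MAX_LIMIT:
        issues.append("limit out of range")
    return issues
```

Rejecting on the allowlist, rather than trying to detect bad input, means an injected shell fragment in a service name fails for the boring reason that it is not a known service.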

Latency, Cost, and Accuracy: Pick Two—Then Earn the Third

Every system has a triangle. The way out is to tune per use case. For triage, keep latency under two seconds and accept a narrower scope. For code suggestions, keep costs down by caching retrieval and using a lighter planner. For anything user-facing, spend extra on verification. In production, AI agents for developers feel fast when the first useful token hits the screen quickly—even if the full plan finishes later.

| Use Case | Latency Goal | Cost Goal | Accuracy Strategy |
|---|---|---|---|
| Incident Triage | < 2s to first suggestion | < $0.01/op | Strict tool list + short plans |
| Code Review Hints | < 8s full result | < $0.03/PR | Verifier checklist + diff lint |
| Runbook Navigation | < 3s | < $0.005/op | FAQ index first, deep index on miss |
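The targets in the table are easier to enforce when they live in code next to the traces. The sketch below encodes the three rows as data; the `Budget` type, the use-case keys, and the `over_budget` helper are hypothetical names, while the thresholds are taken from the table.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Budget:
    max_latency_ms: int
    max_cost_usd: float


# Thresholds mirror the table: the goal is to stay strictly below each value.
BUDGETS = {
    "incident_triage": Budget(max_latency_ms=2000, max_cost_usd=0.01),
    "code_review_hints": Budget(max_latency_ms=8000, max_cost_usd=0.03),
    "runbook_navigation": Budget(max_latency_ms=3000, max_cost_usd=0.005),
}


def over_budget(use_case: str, latency_ms: float, cost_usd: float) -> list[str]:
    """Return which goals ("latency", "cost") a single operation missed."""
    budget = BUDGETS[use_case]
    violations = []
    if latency_ms >= budget.max_latency_ms:
        violations.append("latency")
    if cost_usd >= budget.max_cost_usd:
        violations.append("cost")
    return violations
```

Feeding each finished trace through a check like this turns the table from an aspiration into an alert.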

Edge and Browser: When to Move Work Closer to the User

Some pieces belong near the user. Reranking a handful of results, cleaning a prompt, or generating a short summary can run on edge functions or even in the browser. WebGPU opens up tiny models for classification, while the heavy planner stays on your backend. That split makes AI agents for developers feel more responsive without breaking your trust model.

```javascript
// Edge sketch: cheap rerank with a tiny classifier
export default async function handler(req) {
  const { query, candidates } = await req.json();
  const features = featurize(query, candidates); // fast, synchronous
  const scores = tinyModel.predict(features);    // runs in < 10 ms
  return new Response(JSON.stringify(rank(candidates, scores)));
}
```

Versioning, Rollouts, and Guarded Autonomy

Agents change behavior with small prompt edits. Treat prompts like code: version them, review them, and release them behind flags. Roll out changes to 5% of traffic and watch traces for regressions. If you allow the agent to perform write actions, tie those actions to a policy with a review threshold. In other words, autonomy is allowed where it’s cheap to undo; everywhere else, default to “ask first.” That’s the happy path for AI agents for developers who need to coexist with cautious teammates.
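A percentage rollout for prompt versions can be done with a stable hash, so the same request or user always lands in the same bucket. This is a sketch, assuming hypothetical version labels (`v12`, `v13`) and a flag name of your choosing; it is not tied to any particular feature-flag service.

```python
import hashlib


def in_rollout(flag: str, unit_id: str, percent: float) -> bool:
    """Deterministically place unit_id in or out of a percentage rollout."""
    digest = hashlib.sha256(f"{flag}:{unit_id}".encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 10_000  # 0..9999
    return bucket < percent * 100


def pick_prompt_version(unit_id: str, stable: str = "v12",
                        candidate: str = "v13", percent: float = 5.0) -> str:
    """Serve the candidate prompt to roughly `percent` of units."""
    return candidate if in_rollout("planner_prompt", unit_id, percent) else stable
```

Including the flag name in the hash keeps different rollouts independent, so the same users are not always the first to see every change.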

Evaluation That Fits in CI

Evaluation gets scary when it becomes subjective. Keep it boring and measurable. Freeze a set of “golden tasks” that reflect real tickets: a flaky integration test, a noisy log, a small refactor that adds types, a doc update. For each, define pass/fail criteria that an engineer can check in thirty seconds. Run these in CI like any other test suite. Track three numbers: correctness, latency, and cost. Promote changes that lift correctness by at least ten percent without blowing the budgets.
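The promotion rule can be written down as a small gate. This sketch assumes "ten percent" means a relative lift over the baseline pass rate, and it borrows the 5000 ms and 2 cent limits from the CI checks in this section; the `EvalRun` type and `should_promote` function are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EvalRun:
    passed: int                 # golden tasks that met their pass criteria
    total: int                  # golden tasks attempted
    p95_latency_ms: float
    cost_cents_per_task: float

    @property
    def correctness(self) -> float:
        return self.passed / self.total if self.total else 0.0


def should_promote(baseline: EvalRun, candidate: EvalRun,
                   min_relative_lift: float = 0.10,
                   max_latency_ms: float = 5000.0,
                   max_cost_cents: float = 2.0) -> bool:
    """Promote only if correctness lifts enough and both budgets hold."""
    if baseline.correctness == 0:
        lift_ok = candidate.correctness > 0
    else:
        lift = (candidate.correctness - baseline.correctness) / baseline.correctness
        lift_ok = lift >= min_relative_lift
    within_budget = (candidate.p95_latency_ms < max_latency_ms
                     and candidate.cost_cents_per_task < max_cost_cents)
    return lift_ok and within_budget
```

If your team reads "ten percent" as percentage points instead, swap the relative lift for a plain difference; the important part is that the rule is explicit and checked by a machine.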

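```bash
# Simplified CI step
set -euo pipefail
run_agent --scenario flaky_test.json | tee out.json
# -e makes jq exit non-zero when the expression is false, which fails the step
jq -e '.verified == true' out.json
jq -e '.latency_ms < 5000' out.json
jq -e '.cost_cents < 2' out.json
```

Human-in-the-Loop Done Right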

Agents don’t replace teammates; they free them. Make escalation pleasant: when confidence drops or risk rises, the agent posts a short summary with links to the artifact, the trace, and the sources it used. A human can step in quickly because the context is clean. When people enjoy the handoff experience, usage climbs. That’s when AI agents for developers quietly become part of the team’s muscle memory.
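A pleasant handoff mostly comes down to a clear trigger and a predictable message. The sketch below assumes a numeric confidence score and three coarse risk levels; both thresholds and the message layout are illustrative, not a standard.

```python
RISK_RANK = {"low": 0, "medium": 1, "high": 2}


def should_escalate(confidence: float, risk: str,
                    min_confidence: float = 0.7, max_auto_risk: str = "medium") -> bool:
    """Escalate when confidence drops or risk rises above the automatic ceiling."""
    return confidence < min_confidence or RISK_RANK[risk] > RISK_RANK[max_auto_risk]


def escalation_summary(task: str, reason: str, artifact_url: str,
                       trace_url: str, sources: list) -> str:
    """Build the short message a human reads first when taking over."""
    lines = [
        f"Needs review: {task}",
        f"Why: {reason}",
        f"Artifact: {artifact_url}",
        f"Trace: {trace_url}",
        "Sources:",
    ]
    lines += [f"  - {source}" for source in sources]
    return "\n".join(lines)
```

Keeping the summary to a fixed handful of lines makes it scannable in chat, and the links do the heavy lifting for anyone who needs the full story.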

A Reference Skeleton You Can Adapt

Here’s a compact skeleton that usually lands well—planner and executor are separate, retrieval is explicit, verification is opinionated, and all outputs are structured.

```python
from dataclasses import dataclass
from typing import Any, Dict, List, Literal, Optional, Tuple
import json, time

ActionName = Literal["read_logs", "open_ticket", "propose_patch", "open_pr"]
WRITE_ACTIONS = ("open_ticket", "open_pr")

@dataclass
class Action:
    name: ActionName
    args: Dict[str, Any]
    reason: str

TOOLS: Dict[str, Any] = {}

def tool(name):
    """Register a function in TOOLS under the given name."""
    def wrap(fn):
        TOOLS[name] = fn
        return fn
    return wrap

@tool("read_logs")
def read_logs(service: str, level: str = "ERROR", limit: int = 50):
    return [{"ts": "2025-08-20T10:22:11Z", "level": level, "msg": "db timeout"}][:limit]

@tool("open_ticket")
def open_ticket(title: str, body: str, labels: List[str]) -> str:
    return "DEV-2042"

@tool("propose_patch")
def propose_patch(path: str, hint: str) -> str:
    return f"diff --git a/{path} b/{path}\n- backoff=1\n+ backoff=2"

@tool("open_pr")
def open_pr(title: str, diff: str) -> str:
    return "https://git.example/pr/42"

def llm(prompt: str, schema: Optional[dict] = None) -> str:
    return "{}"  # stub: integrate your model; enforce schema

ACTION_SCHEMA = {
    "type": "object",
    "properties": {
        "name": {"enum": ["read_logs", "open_ticket", "propose_patch", "open_pr"]},
        "args": {"type": "object"},
        "reason": {"type": "string"},
    },
    "required": ["name", "args", "reason"],
}

def structured(prompt: str, schema: dict) -> dict:
    data = json.loads(llm(prompt, schema=schema) or "{}")
    # Minimal validation: fail loudly if required keys are missing.
    # Swap in a full JSON Schema validator for real use.
    missing = [key for key in schema.get("required", []) if key not in data]
    if missing:
        raise ValueError(f"planner output missing keys: {missing}")
    return data

def retrieve(task: str) -> str:
    return "latest deploy: 14:30Z; incidents: none; feature_flag=db_retry"

def plan(task: str, ctx: str) -> dict:
    return structured(f"Plan next safe step for: {task}\nContext:\n{ctx}", ACTION_SCHEMA)

def verify(action: Dict[str, Any]) -> Tuple[bool, List[str]]:
    issues = []
    if action["name"] == "open_pr" and "rm -rf" in action["args"].get("diff", ""):
        issues.append("dangerous command")
    return (len(issues) == 0, issues)

def run_agent(task: str) -> Dict[str, Any]:
    ctx = retrieve(task)
    transcript = []
    for _ in range(8):
        action = plan(task, ctx)
        transcript.append(action)
        if action["name"] in WRITE_ACTIONS:
            # Verify before the write so a risky action is blocked, not just reported.
            ok, issues = verify(action)
            if not ok:
                return {"result": None, "verified": False, "issues": issues, "steps": transcript}
        fn = TOOLS.get(action["name"])
        result = fn(**action["args"]) if fn else {"error": "unknown tool"}
        ctx += f"\nRESULT({action['name']}): {result}"
        if action["name"] in WRITE_ACTIONS:
            return {"result": result, "verified": True, "issues": [], "steps": transcript}
        time.sleep(0.1)
    return {"result": None, "verified": False, "issues": ["step budget exceeded"], "steps": transcript}
```

Swap this into your stack, wire in your real tools, and you’ll have something useful by the end of the day. Note that the stubbed `llm` returns empty JSON, so `run_agent` raises until a real model is wired in. Keep the prompts short and your schemas strict. That’s how AI agents for developers stay predictable.

From Prototype to Team Habit

Culture matters. The fastest way to make agents part of daily work is to solve one small, annoying problem end-to-end. Pick a workflow that nobody loves but everybody needs—like turning flaky test logs into a Jira ticket with clear reproduction steps. Ship a tiny agent that does only that job and does it well. When it works, people will ask for the next one. This is how AI agents for developers become routine: a steady stack of honest wins, not a sweeping mandate.

My Short List of Common Mistakes

- Letting prompts balloon: long prompts are hard to reason about and easy to break. Keep them short, with examples.

- Too many tools at once: if you need more than eight tools for a task, split the task.

- No guardrails: schemas and allowlists cost nothing compared to a bad write.

- Hiding failures: escalate early with a clean summary; people are faster than retries.

- Skipping cleanup: stale runbooks and unlabeled dashboards confuse humans and agents alike.

Team Workflow and Developer Experience

Your developer experience is the agent’s experience. Clear logs, clean interfaces, and sane defaults benefit both. If you’re building UI around an agent (review widgets, triage panels), lean into components that feel familiar to your team. A handful of small decisions—like using a shared design system and consistent button copy—go a long way.

Speaking of front-end polish and practical dev speed, two detailed pieces from our Dev section pair well with this guide:

Tailwind CSS Custom Styles — pragmatic patterns for keeping design systems tidy as your app grows.

Mac Development Environment Setup 2025 Recommendations — a battle-tested setup that gets new machines shipping in a single evening.

Round-Trip Example: From Alert to Pull Request

Here’s a concrete, end-to-end example I ran last month. We got a spike of 504s on a service that talks to our database. The agent:

- Read the relevant logs (p95 timeouts trending up).

- Pulled a runbook page about transient DB slowness after a schema change.

- Proposed a diff to tweak retry backoff on a single call site.

- Opened a PR with a crisp summary and links to the trace and dashboards.

- Flagged the PR as “requires approval” because the change touched prod code paths.

We reviewed, added one comment about telemetry, and merged. End-to-end, it was under an hour, and the agent handled the messy bits we usually procrastinate. This is the kind of win AI agents for developers can make repeatable.

Docs and Runbooks the Agent Can Actually Use

Write runbooks like you’re writing for a colleague who’s smart but new. Short steps, clear prerequisites, and explicit “if this, then that.” Avoid screenshots for anything that changes weekly. For docs that the agent will read, put key parameters in tables and label them the same way your tools expect them. Consistency beats cleverness.

Incident Response for Agent Systems

Treat agent issues like service issues. Define severity levels and playbooks. If an agent makes a bad call on a deploy, freeze the relevant tools, roll back the prompt change, and flip the system to read-only while you investigate. Keep a small incident template ready so you can write once and return to the work quickly. The important thing is to make recovery certain and cheap. That’s how AI agents for developers earn long-term trust.
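Freezing tools and flipping to read-only are much easier during an incident if the switch already exists. Here is a minimal sketch of such a gate; the `ToolGate` class is a hypothetical helper, and the tool names reuse examples from earlier in this guide.

```python
from dataclasses import dataclass, field


@dataclass
class ToolGate:
    """Single place the executor asks before calling any tool."""
    write_tools: frozenset
    read_only: bool = False
    frozen: set = field(default_factory=set)

    def freeze(self, tool: str) -> None:
        """Block one tool entirely while it is under investigation."""
        self.frozen.add(tool)

    def unfreeze(self, tool: str) -> None:
        self.frozen.discard(tool)

    def allows(self, tool: str) -> bool:
        if tool in self.frozen:
            return False
        if self.read_only and tool in self.write_tools:
            return False
        return True
```

Because recovery is a matter of flipping `read_only` back and unfreezing the tool, the cost of pausing the agent stays low, which makes people more willing to pause it early.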

Resources Worth a Bookmark

If you want broader context or leadership-level framing around what’s changing for engineering teams, these public resources make for good, neutral reading:

- GitHub Octoverse for ecosystem trends and real-world shifts in tooling and languages.

- Stack Overflow Developer Survey 2025 for a snapshot of how developers say they’re using AI tools today.

- Microsoft Build 2025: The age of AI agents for the platform-level narrative many teams are aligning with.

FAQs I Hear a Lot

What model should I start with? Start with something fast for planning and something slightly stronger for verification. Add larger models only when your evaluation set shows a clear win.

How many tools is too many? If you’re exposing more than eight for a single use case, you probably have two use cases mashed together.

Can I run part of this locally? Absolutely. Cache embeddings, run small rerankers at the edge or in the browser, and keep the planner in your trusted backend.

Do I need multi-agent orchestration? Only if you see clear boundaries—like a researcher that gathers context and an executor that applies changes. Start with one agent and a short plan.

Closing Thoughts

Good tools get out of your way. Done right, AI agents for developers feel like a quiet teammate who understands your codebase, knows your runbooks, and brings you a fix instead of a paragraph. Keep the loop small, the contracts strict, and the traces legible. Build trust with small, repeatable wins. If that sounds almost boring, that’s exactly the point.

FoxDoo Technology
