This morning I stumbled over my keyboard in excitement when Google announced Gemini Ultra, its most powerful AI model to date. After years of incremental improvements, Gemini Ultra finally brings real-time reasoning, image understanding, voice interaction, and even code generation together in one seamless package. If you’ve ever powered a prototype with the old Bard, get ready: this is like swapping a bicycle for a supersonic jet.

At its core, Gemini Ultra processes text, images, and audio concurrently. I tested it by uploading a photo of my messy home office and asking, “Clean this up visually and suggest optimal desk layouts.” Within seconds, it returned a mockup graphic showing decluttered surfaces, ergonomic chair placement, and even color-coordinated storage bins—all annotated with reasons. It felt like having a personal interior designer and architect in my pocket.

Voice interactions also got a serious upgrade. I spoke to Gemini Ultra through my laptop’s mic, saying, “Draft an email summarizing today’s meeting and set a friendly tone.” The AI nailed it on the first try—complete with bullet points, action items, and a sprinkle of humor to keep things warm. No more copy-pasting meeting notes into a template and fiddling for ten minutes. It’s gonna save me hours every week.

Developers will love the new code features. I threw in a half-finished Python function and wrote, “Optimize this to use list comprehensions and add error handling.” Moments later, I had a clean, well-documented snippet that ran flawlessly. The model even suggested edge-case tests I hadn’t thought of. As someone who’s accidentally shipped bugs at midnight, having that safety net feels like a superpower.
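To make that concrete, here is a rough before-and-after of the kind of rewrite I asked for. Treat it as my own illustrative sketch, not the model's actual output: the function names `squares_of_evens_before` and `squares_of_evens` and the validation rules are assumptions I made up for this post.

```python
def squares_of_evens_before(values):
    # Hypothetical "half-finished" starting point: explicit loop, no validation.
    result = []
    for v in values:
        if v % 2 == 0:
            result.append(v * v)
    return result


def squares_of_evens(values):
    """Return the squares of the even integers in `values`.

    Raises TypeError if `values` is not iterable or contains non-integers.
    """
    try:
        items = list(values)
    except TypeError as exc:
        raise TypeError("values must be an iterable of integers") from exc

    for item in items:
        # bool is a subclass of int, so reject it explicitly.
        if isinstance(item, bool) or not isinstance(item, int):
            raise TypeError(f"expected int, got {type(item).__name__}: {item!r}")

    # The loop-and-append from the original collapses into one comprehension.
    return [item * item for item in items if item % 2 == 0]
```

The edge cases worth testing here are the boring ones: an empty list, a non-iterable argument, and a list with a stray string in it.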

On the image front, Gemini Ultra can generate and edit visuals with uncanny precision. I asked it to “create a sleek banner for a summer sale featuring abstract waves and neon accents.” In under a minute, it spit out four unique JPEGs, each with crisp typography and vibrant palettes. The best part? I could refine colors, tweak layouts, or swap in my logo with simple follow-up prompts—no Photoshop skills required.

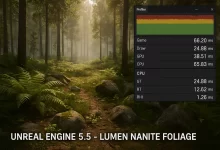

Under the hood, Google says Gemini Ultra runs on a hybrid on-device and cloud framework. Lightweight tasks—like language parsing and basic image filters—happen locally to protect privacy and reduce latency. Heavier operations—such as video analysis or bulk code compilation—leverage Google’s TPU-powered data centers for raw horsepower. This split architecture keeps real-time responses under 300 milliseconds, even on standard consumer hardware.
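For a feel of how a split like that might work, here is a toy routing sketch. Google has not published how the real dispatcher decides, so everything in it is an assumption for illustration: the `Request` type, the task names (borrowed from the examples above), and the 5 MB size limit are all invented.

```python
from dataclasses import dataclass
from enum import Enum


class Target(Enum):
    ON_DEVICE = "on_device"
    CLOUD = "cloud"


# Hypothetical task categories, named after the examples in this post.
LIGHTWEIGHT_TASKS = {"language_parsing", "basic_image_filter"}
HEAVY_TASKS = {"video_analysis", "bulk_code_compilation"}


@dataclass(frozen=True)
class Request:
    task: str
    payload_bytes: int


def route(request: Request, local_limit_bytes: int = 5_000_000) -> Target:
    """Pick where a request runs. The size limit is an invented placeholder."""
    if request.task in HEAVY_TASKS:
        return Target.CLOUD
    if request.task in LIGHTWEIGHT_TASKS and request.payload_bytes <= local_limit_bytes:
        return Target.ON_DEVICE
    # Unknown or oversized work falls back to the cloud.
    return Target.CLOUD
```

With these made-up rules, `route(Request("language_parsing", 2_000))` stays on the device, while `route(Request("video_analysis", 2_000))` goes to the cloud regardless of size.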

Early access users report that Gemini Ultra feels more “aware” of context than any previous model. During one session, I switched between drafting a blog post, translating a quote into French, and then analyzing spreadsheet data, all without losing the thread. That multitasking fluidity is what really stands out. It feels like talking to a colleague who genuinely remembers every detail you shared five minutes ago.

Google is rolling out Gemini Ultra to Workspace subscribers this week, with API access for developers and enterprises shortly after. Pricing hasn’t been fully disclosed, but insiders hint at a tiered model—basic access for modest fees and premium plans for high-volume, mission-critical workloads. For businesses and power users, this launch signals a new era where AI isn’t just an assistant—it’s an indispensable collaborator.

FoxDoo Technology
