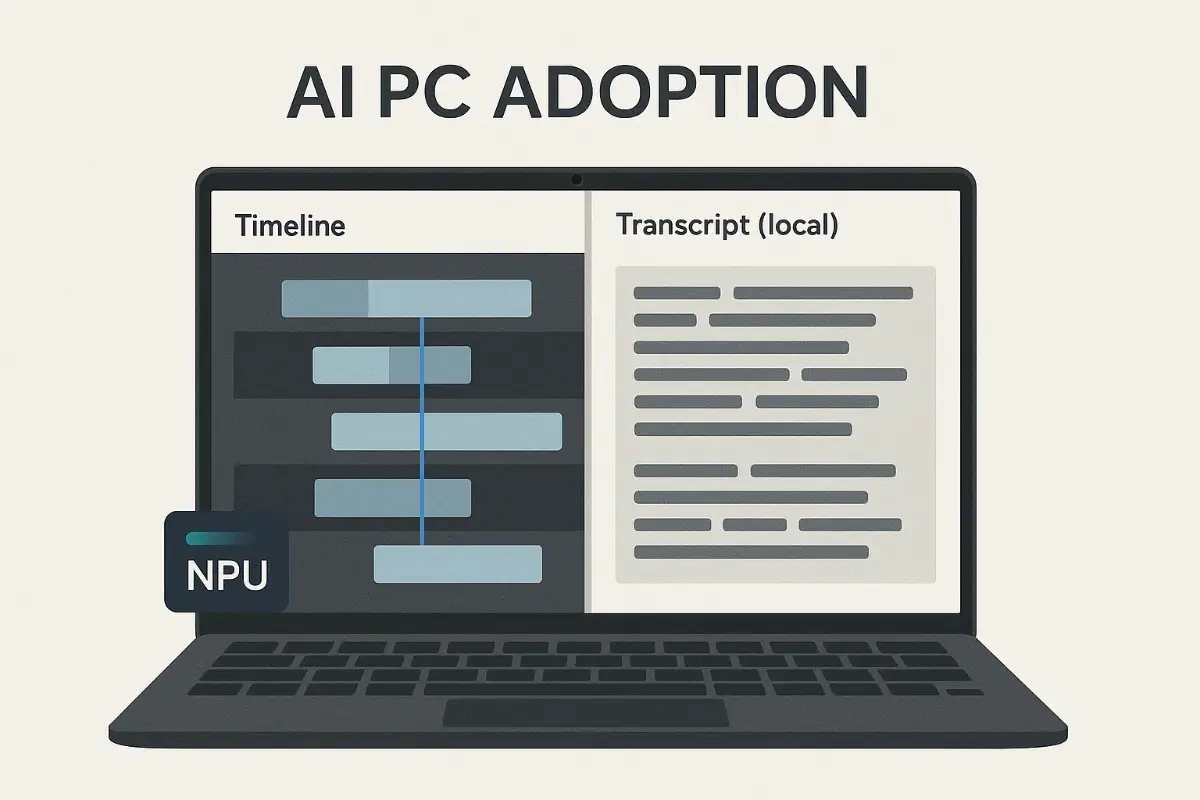

I’ve been skeptical of buzzwords, but this morning’s rollout pushed me over the line: AI PC adoption finally feels real, not theoretical. You can see it on store shelves—more laptops and minis shipping with dedicated NPUs—and you can feel it in everyday chores that used to chew time: trimming a video, cleaning up audio, searching your own notes without cloud lag. It’s the kind of shift you notice on a Tuesday afternoon when a task that took eight minutes now takes two.

What flipped the switch

Three things landed together. First, the newest Windows and macOS updates unlocked broader on-device model support—summaries, translations, and voice cleanup running locally, not round-tripping to a data center. Second, a critical mass of hardware is finally in the wild: Snapdragon X-class machines, Intel’s latest Lunar Lake designs, and Ryzen AI systems all pushing NPUs past “demo” speeds. Third, the big app vendors quietly shipped native hooks, so the smart stuff lives inside the tools you already use instead of a separate toy app.

What you’ll actually do faster

- Meetings to minutes: Local transcription that doesn’t buckle on spotty Wi-Fi, plus one-click action items you can paste into your task manager.

- Video cleanup: Background noise removal and scene cuts run on the NPU while your GPU handles playback, so there's no fan roar and no beachballs.

- Search that remembers: Type “the pricing slide from last week’s deck” and your PC surfaces the right thumbnail in seconds, not after a cloud crawl.

- Coding chores: Scaffolding boilerplate and writing unit tests against your local repo context without shipping the whole codebase to an external service.

Battery life and the “quiet speed” effect

What surprised me most wasn’t raw performance—it was quiet speed. On an NPU-equipped laptop, I exported a short 1080p clip with denoising and auto-captions while writing an email. The fans never spun up, and the battery barely moved. That’s the point of dedicated silicon: shove the math into a low-power lane and leave your CPU/GPU for the rest of your day.

Where the gains are biggest (and where they aren’t)

If your work is a mosaic of small tasks—email, notes, clips, screenshots—AI PC adoption pays dividends immediately. For massive model training, the cloud still wins. But for drafting, editing, summarizing, and tidying, the local loop is faster, cheaper, and more private. I no longer think “Do I have a signal?” before I hit summarize; I just do it.

Privacy and control without heroics

Running models locally means your messy draft, your legal brief, or that awkward meeting audio stays where it started. The newer control panels show what’s processed on-device and what goes out, with per-app toggles. I keep cloud features on for team projects and lock them down for anything under NDA. No drama, no new login to remember.

How to tell if your next PC is actually “AI-ready”

- NPU throughput: Look for the NPU's rated throughput in TOPS (trillions of operations per second) and confirm current drivers are available for it. The number matters, but so does software support.

- Memory headroom: 16 GB is workable; 32 GB makes multi-app workflows breathe when you’re crunching video and notes together.

- Thermals: Thin is nice; sustained performance is nicer. A good vapor chamber beats a spec sheet brag.

- App ecosystem: Check that your editor, video tool, and note app list on-device features—not just “AI” badges.
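If you're comparing several machines, the checklist above can be turned into a small script. This is only a rough sketch: the `Machine` fields and the `ai_ready_notes` helper are made-up names for illustration, the TOPS floor is something you pick yourself (no figure is assumed here), and the memory bands simply mirror the 16 GB / 32 GB guidance above. Thermals are left out because they can't be read off a spec sheet.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Machine:
    """Hypothetical spec-sheet summary for one candidate PC."""
    npu_tops: Optional[float]   # rated NPU throughput, or None if no NPU is listed
    drivers_current: bool       # are up-to-date NPU drivers available?
    ram_gb: int
    on_device_apps: List[str]   # apps that explicitly list on-device features


def ai_ready_notes(machine: Machine, required_apps: List[str], min_tops: float) -> List[str]:
    """Return a list of concerns; an empty list means nothing was flagged.

    min_tops is the caller's own floor, not a recommended value.
    """
    notes = []
    if machine.npu_tops is None:
        notes.append("no NPU listed")
    elif machine.npu_tops < min_tops:
        notes.append(f"NPU below your chosen floor of {min_tops} TOPS")
    if not machine.drivers_current:
        notes.append("NPU drivers out of date")
    if machine.ram_gb < 16:
        # Assumption: anything under the "workable" 16 GB mark gets flagged.
        notes.append("less than 16 GB RAM")
    elif machine.ram_gb < 32:
        notes.append("16 GB class: workable, tight for video plus notes")
    missing = [app for app in required_apps if app not in machine.on_device_apps]
    if missing:
        notes.append("no on-device features listed for: " + ", ".join(missing))
    return notes
```

Fill in one `Machine` per candidate and compare the lists. The shortest list isn't automatically the winner, but it shows which spec-sheet claims to question before you buy.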

A one-hour setup that pays back immediately

- Update OS & drivers: Install the latest OS and driver updates, then enable the on-device model pack and NPU runtime in settings if your system offers them.

- Wire your apps: Turn on local transcription in your meeting tool, local search in your notes, and NPU mode in your editor or NLE.

- Create two automations: “Record → summarize → task list” for meetings, and “Screenshot → OCR → file to project” for research.

- Measure one week: Track minutes saved on three repeat chores. If nothing moves, tweak prompts or switch the app integration.
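For the measuring step, a spreadsheet works fine, but here's a minimal sketch if you'd rather script it. The `Chore` class and both helper functions are hypothetical names, and the numbers in the example are placeholders rather than measurements (the first one borrows the eight-minutes-to-two figure from the intro).

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class Chore:
    name: str
    minutes_before: float  # typical time per run before turning on local features
    minutes_after: float   # typical time per run with on-device features
    runs_per_week: int

    def weekly_minutes_saved(self) -> float:
        return (self.minutes_before - self.minutes_after) * self.runs_per_week


def weekly_report(chores: List[Chore]) -> Tuple[Dict[str, float], float]:
    """Return per-chore minutes saved and the weekly total."""
    per_chore = {c.name: c.weekly_minutes_saved() for c in chores}
    return per_chore, sum(per_chore.values())


def needs_tweaking(chores: List[Chore]) -> List[str]:
    """Names of chores where nothing moved (or things got slower)."""
    return [c.name for c in chores if c.weekly_minutes_saved() <= 0]


if __name__ == "__main__":
    # Placeholder numbers for illustration only.
    week = [
        Chore("clip export", 8, 2, 5),
        Chore("meeting notes", 10, 10, 3),
        Chore("slide search", 4, 1, 6),
    ]
    per_chore, total = weekly_report(week)
    print(per_chore, total)
    print("Tweak prompts or switch integration for:", needs_tweaking(week))
```

Anything that shows up in `needs_tweaking` is a candidate for a different prompt or a different app integration.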

What this means for teams

Rollouts used to stall on cost and privacy. With AI PC adoption humming, pilots get simpler: buy two AI-ready machines per squad, turn on local features, and measure cycle time instead of arguing philosophy. Security is happier (fewer uploads), finance sees fewer surprise API bills, and users get speed without changing their tools. It’s not a revolution; it’s an accumulation of little frictions removed.

A quick personal anecdote

Last week I rewrote a press note between gates at SFO. The Wi-Fi kept dropping, but my laptop’s on-device rewrite and tone adjuster didn’t care. I boarded with a clean draft, action items from the last call already in my task list, and a quiet battery icon. That’s the moment I stopped eye-rolling the marketing slides and started enjoying the minutes I got back.

Bottom line: AI PC adoption isn’t about posters in store windows—it’s about the day your laptop quietly helps you finish early. Today felt like that day.

FoxDoo Technology
