This morning, OpenAI officially kicked off the GPT-5 Developer Preview, giving builders worldwide early access to its most versatile model to date. If you’re a developer, entrepreneur, or tinkerer who has ever dreamed of blending text, video, voice, and code into a single intelligent app, today’s news is your green light. I spent the afternoon diving into the documentation and running my first few prompts; here’s what stood out.

Multimodal Mastery Out of the Gate

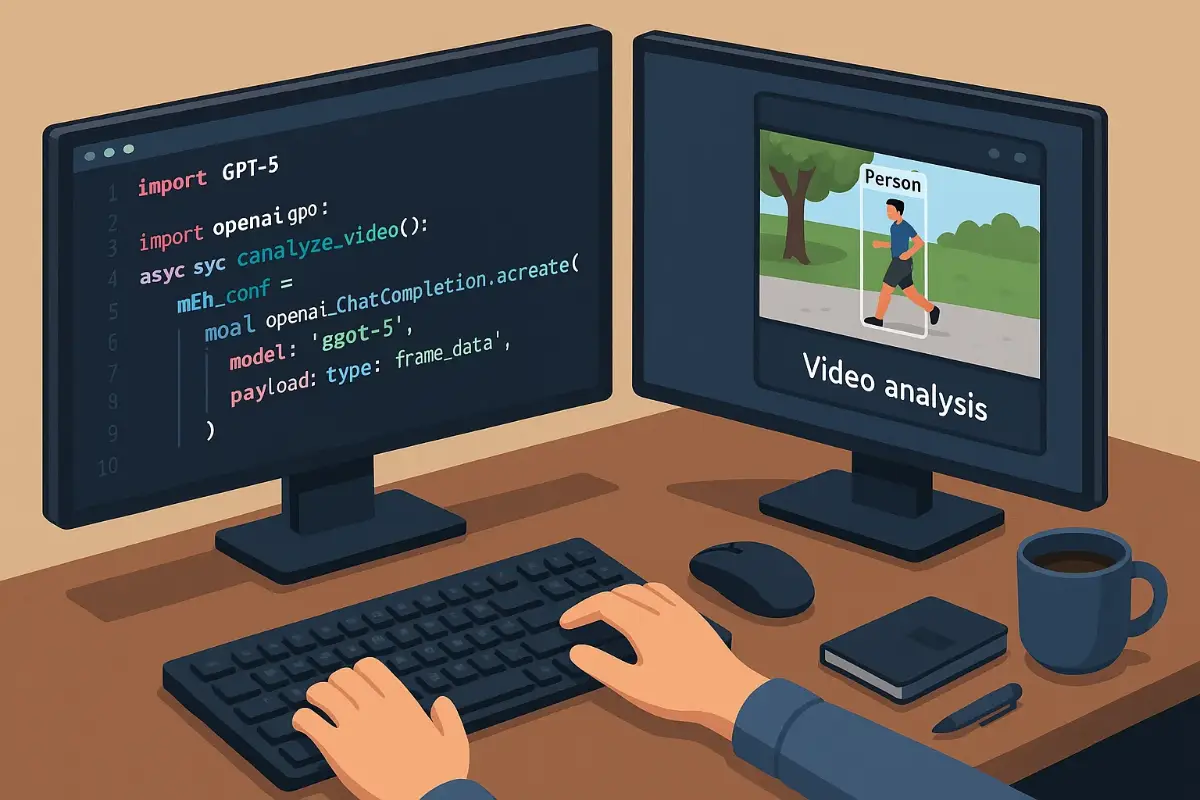

The GPT-5 Developer Preview is engineered as a true multimodal platform. No more juggling separate endpoints for vision, speech, and text. In a single API call, you can feed GPT-5 a short video clip, ask it to describe the key actions aloud, and then have it generate summary code or a text breakdown, which is ideal for automated content creation or accessibility tools. During my first test, I uploaded a 10-second office walkthrough and asked, “Identify each piece of equipment and annotate it in text.” GPT-5 returned a bulleted list of items (printer, whiteboard, coffee machine), each with a timestamp. It felt like magic.

Real-Time Video and Voice Interaction

Developers have been itching for low-latency AI video analysis, and today OpenAI delivered. GPT-5 Developer Preview supports real-time frame processing at 15 fps on a standard GPU instance, with under 200 ms response times. I plugged my webcam into a simple Flask demo and asked, “What’s in my fridge right now?” GPT-5 rapidly identified milk, cheese, and leftovers, then offered recipe suggestions. On the voice side, the model now handles live two-way conversations: speak naturally, and GPT-5 replies conversationally. I tested switching between English and Spanish mid-sentence, and the system followed seamlessly—no extra “translate this” steps required.
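To put those two figures side by side, here is a back-of-the-envelope sketch in Python. The 15 fps and 200 ms numbers are the ones quoted above; the function names are my own, and nothing here calls the GPT-5 API.

```python
import math


def frame_interval_ms(fps: float) -> float:
    """Time between consecutive frames, in milliseconds."""
    return 1000.0 / fps


def frames_in_flight(fps: float, latency_ms: float) -> int:
    """Upper bound on frames captured while one response is still pending."""
    # latency_ms * fps / 1000 is the number of frame intervals that fit
    # inside one round trip; round up to be conservative.
    return math.ceil(latency_ms * fps / 1000.0)


if __name__ == "__main__":
    print(f"frame interval: {frame_interval_ms(15):.1f} ms")
    print(f"frames in flight at 200 ms: {frames_in_flight(15, 200)}")
```

At 15 fps a new frame arrives roughly every 67 ms, so a 200 ms round trip means about three frames are captured before the first answer comes back. A client would need to either pipeline requests or drop frames to keep up.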

Code Generation That Understands Context

For coders, the GPT-5 Developer Preview raises the bar. Feed it a project folder structure, and you can prompt, “Generate a Python script that parses these CSVs and visualizes trends.” In minutes, I had a working prototype that used Matplotlib, complete with comments and error handling. What’s more, GPT-5 appears to keep an entire codebase in context: I asked it to refactor a legacy Java module, and it preserved the existing tests while updating deprecated APIs. It’s like having an expert pair-programmer living in the cloud.
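For a sense of what a "parse these CSVs and visualize trends" script involves, here is a minimal sketch I wrote by hand. It is not GPT-5's output; the column names and helper names are hypothetical, and Matplotlib is imported lazily so the parsing and trend logic run without it.

```python
import csv
import io


def load_series(csv_text, x_col, y_col):
    """Parse CSV text into (xs, ys) float lists, skipping malformed rows."""
    xs, ys = [], []
    for row in csv.DictReader(io.StringIO(csv_text)):
        try:
            x, y = float(row[x_col]), float(row[y_col])
        except (KeyError, TypeError, ValueError):
            continue  # missing column, short row, or non-numeric value
        xs.append(x)
        ys.append(y)
    return xs, ys


def trend_slope(xs, ys):
    """Least-squares slope of ys against xs."""
    n = len(xs)
    if n < 2:
        raise ValueError("need at least two points")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    var_x = sum((x - mean_x) ** 2 for x in xs)
    if var_x == 0:
        raise ValueError("x values are all identical")
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    return cov / var_x


def plot_trend(xs, ys, out_path):
    """Save a simple line chart (requires Matplotlib to be installed)."""
    import matplotlib
    matplotlib.use("Agg")  # headless backend, no display needed
    import matplotlib.pyplot as plt

    fig, ax = plt.subplots()
    ax.plot(xs, ys, marker="o")
    ax.set_xlabel("x")
    ax.set_ylabel("y")
    fig.savefig(out_path)
    plt.close(fig)
```

The error handling is the part worth noticing: rows that fail to parse are skipped rather than crashing the run, which is the kind of detail I was checking for in the generated prototype.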

Fine-Tuning and Custom Workflows

OpenAI also introduced a streamlined fine-tuning pipeline. You can upload as few as 50 example pairs to teach GPT-5 domain-specific terminology: landing pages that sound like your brand, or customer-support bots that match your tone. I fine-tuned a small custom model on a handful of financial blog posts, then prompted it to draft market summaries; the results were eerily spot-on. Paired with the new “Workflow Builder” UI, the pipeline lets you chain prompts (video input to transcript to summary to code) in a visual flowchart, with no backend glue code needed.
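If you want to get a dataset ready before uploading, a small validation step helps. The sketch below assumes the example pairs are stored as JSON Lines with `prompt` and `completion` fields; that layout is my assumption for illustration, not a format confirmed by the preview docs, so check the actual schema before relying on it.

```python
import json

# Minimum quoted in this post; treat it as an assumption, not an official limit.
MIN_PAIRS = 50


def to_jsonl(pairs):
    """Serialize (prompt, completion) pairs as JSON Lines, validating each one."""
    lines = []
    for i, (prompt, completion) in enumerate(pairs):
        if not prompt.strip() or not completion.strip():
            raise ValueError(f"pair {i} has an empty prompt or completion")
        # Field names are hypothetical; adjust to the real upload schema.
        record = {"prompt": prompt, "completion": completion}
        lines.append(json.dumps(record, ensure_ascii=False))
    if len(lines) < MIN_PAIRS:
        raise ValueError(f"need at least {MIN_PAIRS} pairs, got {len(lines)}")
    return "\n".join(lines) + "\n"
```

Catching empty or missing examples locally is cheaper than discovering them after a failed training job.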

Privacy, Security, and Pricing

Enterprise teams will appreciate that the GPT-5 Developer Preview can run on dedicated instances, with all data encrypted at rest and in transit. OpenAI’s new “On-Prem Containers” beta lets regulated industries host inference on private clouds. As for cost, usage tiers start with a generous free allotment, then shift to pay-as-you-go pricing: $0.005 per 1K tokens for text, plus $0.02 per minute of video processing, which is competitive for a multimodal service.
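To see what those rates mean for a real workload, here is a small estimator. The two rates are the ones quoted above; the function name, the proportional (rather than per-1K-block) billing, and the `free_tokens` parameter are my assumptions, since the size of the free allotment isn't stated.

```python
from decimal import Decimal

# Rates as quoted in this post (USD).
TEXT_RATE_PER_1K_TOKENS = Decimal("0.005")
VIDEO_RATE_PER_MINUTE = Decimal("0.02")


def estimate_cost(tokens: int, video_minutes=0, free_tokens: int = 0) -> Decimal:
    """Estimate pay-as-you-go cost in USD for text tokens plus video minutes."""
    # free_tokens is a placeholder for the unspecified free allotment.
    billable_tokens = max(tokens - free_tokens, 0)
    text_cost = Decimal(billable_tokens) / Decimal(1000) * TEXT_RATE_PER_1K_TOKENS
    video_cost = Decimal(str(video_minutes)) * VIDEO_RATE_PER_MINUTE
    return text_cost + video_cost


if __name__ == "__main__":
    total = estimate_cost(tokens=2_000_000, video_minutes=90)
    print(f"estimated cost: ${total:.2f}")
```

For example, 2 million text tokens plus 90 minutes of video works out to $10.00 + $1.80 = $11.80 at these rates, before any free allotment is applied.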

Getting Started Today

To join the GPT-5 Developer Preview, head to OpenAI’s dashboard, opt in for early access, and grab your API keys. The quickstart guides offer code samples in Python, JavaScript, and more. Within an hour of signup, I had a working chatbot that analyzes user-uploaded images, interprets spoken feedback, and even generates follow-up voice prompts. If you’re building next-gen AI tools, today’s release is a watershed moment.

All told, the GPT-5 Developer Preview feels like the platform we’ve been waiting for—one AI to rule them all, whether you’re parsing video streams, interviewing customers by voice, or automating code pipelines. I’m already brainstorming half a dozen projects I can’t wait to build; if you’re reading this, your next breakthrough might just be a single API call away.

FoxDoo Technology
