Look, I’m gonna be straight with you: I love the cloud, but the bills hit harder than west-coast gas prices. That’s why I dove head-first into Ollama last winter. Picture me huddled in a Vancouver coffee shop, snow outside, fan noise inside, watching a 70B model answer questions locally while my hotspot slept. Pure magic. Ready for that feeling? Let’s roll.

1. Why Ollama Beats Cloud-Only AI

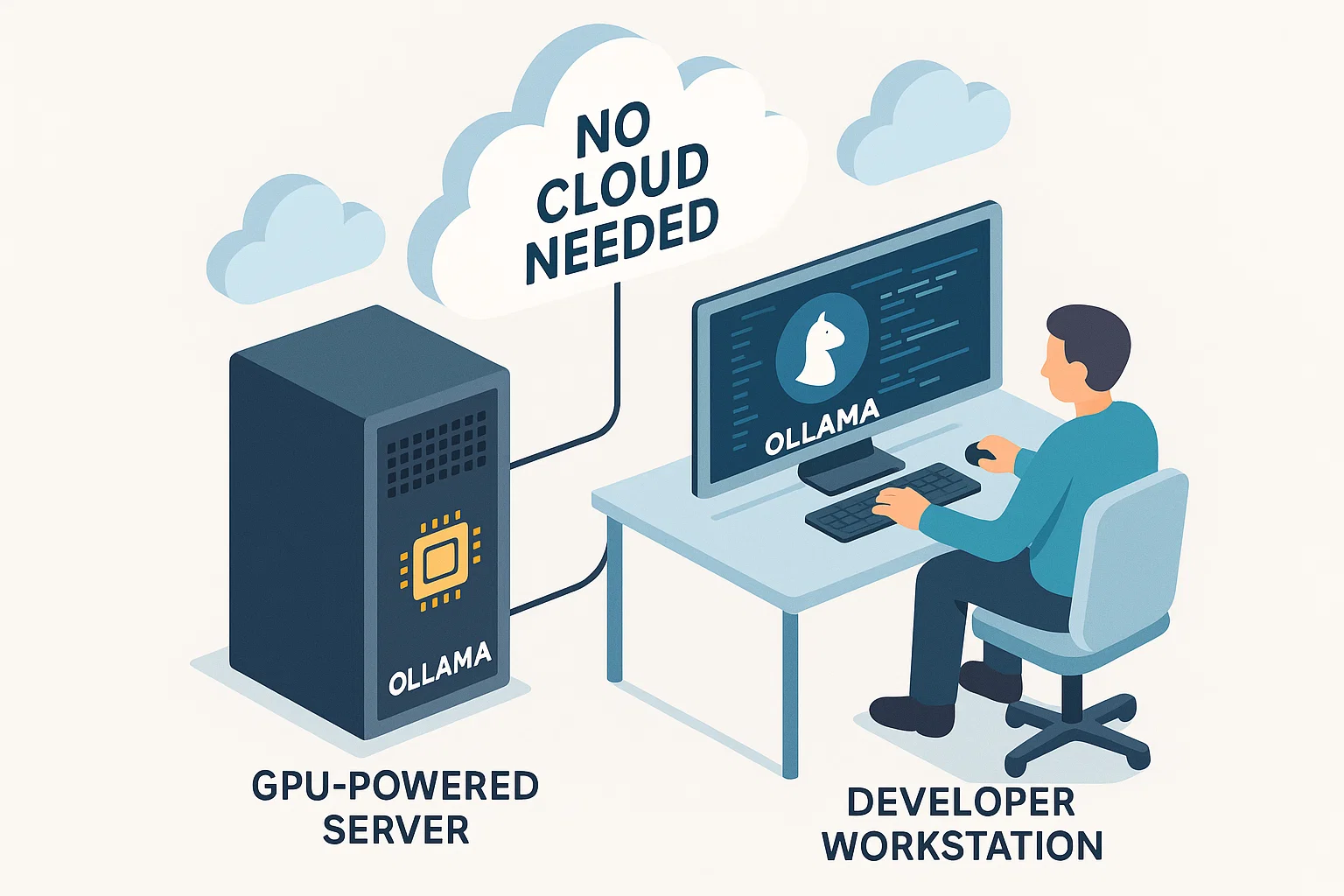

Before we geek out, a quick pulse check on why this framework matters:

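- Latency in milliseconds, not network round trips. Once a model is loaded, replies start streaming almost immediately (think tens of milliseconds to the first token, hardware permitting).

- No API-metered costs. Generate as many tokens as your hardware can handle without sweating your OpenAI bill.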

- Privacy by default. Your data never exits your metal.

- GPU optional. CPU-only boxes can still crunch with smart quantization.

2. 5-Minute Quick-Start Checklist

Need instant gratification? Fire up these commands while the espresso’s still hot. (They assume a clean machine and zero patience.)

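```bash
# macOS (Apple Silicon & Intel): install.sh is Linux-only, so use Homebrew
# (or grab the app from https://ollama.com/download)
brew install ollama
brew services start ollama
ollama run llama3
```

```powershell
# Windows 11/10
Invoke-WebRequest https://ollama.com/download/OllamaSetup.exe -OutFile OllamaSetup.exe
Start-Process .\OllamaSetup.exe
# once setup finishes, open a new terminal:
ollama run llama3
```

```bash
# Ubuntu 22.04
sudo apt update && sudo apt install curl -y
curl -fsSL https://ollama.com/install.sh | sh
ollama run llama3
```

When you land at the `>>>` chat prompt, you’re in!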
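
Before pulling anything bigger, it helps to confirm the server is actually up, for example from a setup script. Here’s a minimal Python sketch (standard library only) that assumes the default address `http://localhost:11434` and relies on the root endpoint answering with the plain-text banner `Ollama is running`:

```python
import http.client
import urllib.request


def is_ollama_running(base_url: str = "http://localhost:11434", timeout: float = 2.0) -> bool:
    """Return True if an Ollama server answers at base_url with its banner."""
    try:
        with urllib.request.urlopen(base_url.rstrip("/") + "/", timeout=timeout) as resp:
            body = resp.read().decode("utf-8", errors="replace")
            return resp.status == 200 and "Ollama is running" in body
    except (OSError, http.client.HTTPException):
        # Connection refused, DNS failure, timeout, HTTP error status, or a
        # malformed reply from something that isn't Ollama.
        return False


print("Ollama is up" if is_ollama_running() else "Ollama is not reachable yet")
```

It returns False instead of raising, so you can poll it in a loop while a freshly installed service or container finishes starting.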

3. Full Installation Guide (The Deep Dive)

3.1 Desktop OS Packages

Windows users grab the installer; it adds a tray app that keeps the Ollama server running in the background, plus a tidy Start-menu entry. Over in macOS land, the app sits in the menu bar and does the same job. Linux kids get a shell script that downloads the official prebuilt binary and registers a systemd service, so no shady PPAs are involved.

3.2 Docker for Infinite Flexibility

Running on a shared server? Docker isolates dependencies and ships a tidy volume that caches all downloaded weights.

```bash
# Pick ONE of these: they all publish port 11434 and share the "ollama" volume.

# CPU-only container
docker run -d --name ollama \
  -v ollama:/root/.ollama \
  -p 11434:11434 \
  ollama/ollama

# NVIDIA GPU (CUDA 12+; needs the NVIDIA Container Toolkit on the host)
docker run -d --gpus all --name ollama-gpu \
  -v ollama:/root/.ollama \
  -p 11434:11434 \
  ollama/ollama

# AMD ROCm riders
docker run -d --device /dev/kfd --device /dev/dri \
  -v ollama:/root/.ollama \
  -p 11434:11434 \
  ollama/ollama:rocm
```

3.3 One-Command Model Fetch

The first model is a single line away:

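```bash
ollama run gemma:2b
```

Behind the scenes, Ollama downloads the model’s layers (the GGUF weights plus its prompt template and parameters), checks each one against its SHA-256 digest, caches them locally, and loads the model. Time to sip.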
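
If you ever suspect a corrupted download, you can repeat that digest check yourself. This sketch assumes pulled layers live under `~/.ollama/models/blobs` (a Linux systemd install keeps them under the `ollama` service user’s home instead) and are named `sha256-<hex digest>`; both are assumptions about the on-disk layout, so check your own install first. It re-hashes each blob in 1 MB chunks and compares the result with the file name:

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Stream the file through SHA-256 so multi-GB weights never sit in RAM."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def blob_is_intact(path: Path) -> bool:
    """Compare a blob's contents with the digest encoded in its name.

    Assumed naming: 'sha256-<hex>' (some older builds used 'sha256:<hex>').
    """
    for prefix in ("sha256-", "sha256:"):
        if path.name.startswith(prefix):
            return sha256_of(path) == path.name[len(prefix):]
    return False  # not named like a content-addressed blob


def check_blobs(blob_dir: Path) -> dict:
    """Map each file name in blob_dir to True (intact) or False."""
    return {p.name: blob_is_intact(p) for p in sorted(blob_dir.iterdir()) if p.is_file()}
```

`check_blobs(Path.home() / ".ollama" / "models" / "blobs")` returns a name-to-bool map. Hashing a 40 GB model takes a while, so run it over coffee; any file not named like a digest also reports False.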

4. Model Management & Quantization

4.1 Core Commands

| Command | Purpose |
|---|---|
| `ollama pull llama3` | Download a model |
| `ollama list` | Show local models |
| `ollama run llama3` | Start interactive chat |
| `ollama stop llama3` | Unload from RAM |
| `ollama rm llama3` | Delete from disk |
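
Every command in the table has an HTTP twin. As one example, `GET /api/tags` returns the same inventory as `ollama list` in JSON, as a `models` array whose entries carry a `name` and a `size` in bytes. A small Python sketch that fetches and summarizes it (the helper names are mine):

```python
import json
import urllib.request


def fetch_tags(base_url: str = "http://localhost:11434") -> dict:
    """GET /api/tags: the API counterpart of `ollama list`."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=5) as resp:
        return json.load(resp)


def summarize_models(tags: dict) -> list:
    """Return (name, size in GB) pairs, largest model first."""
    rows = [
        (m["name"], round(m.get("size", 0) / 1e9, 2))
        for m in tags.get("models", [])
    ]
    return sorted(rows, key=lambda row: row[1], reverse=True)
```

`summarize_models(fetch_tags())` gives you name and size pairs, biggest first, which is a quick way to spot what’s hogging disk before an `ollama rm`.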

4.2 Importing Custom GGUF or Safetensors

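Create a file called `Modelfile` in the same folder as your weights:

```
FROM ./qwen3-32b.Q4_0.gguf
```

Then build it:

```bash
ollama create my-qwen -f Modelfile
```

Quantization isn’t a Modelfile `PARAMETER`; it’s a flag on `ollama create`, and it needs unquantized (FP16/FP32) source weights. If your `FROM` line points at an FP16 file, build with `ollama create my-qwen --quantize q4_K_M -f Modelfile` instead.

Want to layer a LoRA adapter saved in Safetensors? Add this line to the same Modelfile (the adapter should be trained on the same base model):

```
ADAPTER ./my-sentiment-lora
```

4.3 Choosing the Right Quant

- `q8_0`: maximum accuracy; needs beefy VRAM.
- `q4_K_M`: the sweet spot for 7 to 8 B models on 8 GB GPUs; minor perplexity loss.
- `q2_K`: emergency mode for Raspberry Pi fanatics; expect a noticeable quality drop.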
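
To put rough numbers on those trade-offs: weight memory is about parameters × bits per weight ÷ 8 bytes. `q8_0` and `q4_0` have fixed block layouts (8.5 and 4.5 bits per weight, counting each block’s fp16 scale); the k-quant figures below are ballpark averages I’m assuming, since real files mix bit widths per layer. The 1.5 GB headroom for KV cache and runtime buffers is likewise a hypothetical default, not a measured value:

```python
# Approximate bits per weight. q8_0/q4_0 follow from their block layout;
# the k-quant values are rough averages and vary by model architecture.
BITS_PER_WEIGHT = {
    "f16": 16.0,
    "q8_0": 8.5,     # 32 int8 weights + one fp16 scale per block
    "q4_0": 4.5,     # 32 4-bit weights + one fp16 scale per block
    "q4_K_M": 4.85,  # assumed average for this mixed k-quant
    "q2_K": 3.35,    # assumed average for this mixed k-quant
}


def weight_gb(params_billions: float, quant: str) -> float:
    """Weight memory in GB (1e9 bytes), excluding KV cache and runtime overhead."""
    return params_billions * BITS_PER_WEIGHT[quant] / 8


def fits(params_billions: float, quant: str, vram_gb: float, headroom_gb: float = 1.5) -> bool:
    """Rough check: weights plus a hypothetical headroom must fit in VRAM."""
    return weight_gb(params_billions, quant) + headroom_gb <= vram_gb


for quant in ("q8_0", "q4_K_M", "q2_K"):
    print(f"8B @ {quant}: ~{weight_gb(8, quant):.1f} GB of weights")
```

By this estimate an 8 B model at `q4_K_M` needs just under 5 GB for weights, which is why it squeezes onto an 8 GB card while `q8_0` does not.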

Remember, lower-bit quants cut memory and usually speed up inference, at some cost in accuracy. Benchmark, don’t guess.
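
Benchmarking doesn’t need extra tooling: a non-streaming `/api/generate` response ends with timing fields, including `eval_count` (generated tokens) and `eval_duration` (nanoseconds). This Python sketch turns them into tokens per second; `compare()` and the other helper names are my own, and the model tags you pass in must already exist locally:

```python
import json
import urllib.request


def generate(model: str, prompt: str, base_url: str = "http://localhost:11434") -> dict:
    """Call /api/generate without streaming and return the final JSON object."""
    payload = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode("utf-8")
    req = urllib.request.Request(
        f"{base_url}/api/generate", data=payload, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(req, timeout=600) as resp:
        return json.load(resp)


def tokens_per_second(result: dict) -> float:
    """Generation speed from eval_count and eval_duration (nanoseconds)."""
    duration_ns = result.get("eval_duration", 0)
    if duration_ns <= 0:
        return 0.0
    return result.get("eval_count", 0) * 1e9 / duration_ns


def compare(models: list, prompt: str) -> None:
    """Print generation speed for each locally available model tag."""
    for model in models:
        print(f"{model}: {tokens_per_second(generate(model, prompt)):.1f} tok/s")
```

Run `compare()` on two quant tags of the same model with an identical prompt, and average a few runs, since speed varies with output length and whatever else the machine is doing.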

5. Custom Prompts & Modelfiles

This Ollama Tutorial section is where the fun begins. You can hard-wire a system persona, default temperature, or stop tokens directly inside the model.

```
FROM llama3
SYSTEM "You are Gordon Freeman. Respond in silent protagonist style."
PARAMETER temperature 0.2
PARAMETER num_predict 4000
```

Save, build, run, and you’ve got a Half-Life role-play bot that never breaks character. Breen would be proud.
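
Baking the persona into a model is great for sharing, but the same knobs also work per request: `/api/chat` accepts a `system`-role message and an `options` object that takes the Modelfile parameter names. A minimal Python sketch (helper names are mine) that builds and sends such a request against plain `llama3`:

```python
import json
import urllib.request
from typing import Optional


def chat_payload(model: str, user_msg: str, system: Optional[str] = None, **options) -> dict:
    """Build an /api/chat body with a per-request persona and sampling options."""
    messages = []
    if system:
        # Plays the role of the Modelfile's SYSTEM line, for this request only.
        messages.append({"role": "system", "content": system})
    messages.append({"role": "user", "content": user_msg})
    body = {"model": model, "messages": messages, "stream": False}
    if options:
        # Same names as the Modelfile's PARAMETER lines (temperature, num_predict, ...).
        body["options"] = options
    return body


def send_chat(body: dict, base_url: str = "http://localhost:11434") -> str:
    """POST the body and return the assistant's reply text."""
    req = urllib.request.Request(
        f"{base_url}/api/chat",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req, timeout=600) as resp:
        return json.load(resp)["message"]["content"]
```

For example, `send_chat(chat_payload("llama3", "Status report?", system="You are Gordon Freeman.", temperature=0.2, num_predict=4000))`. Per-request settings are handy for A/B-testing a persona before you freeze it into a Modelfile.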

6. REST API Essentials

Spin up curl or Postman—Ollama’s HTTP interface sits on port 11434.

```bash
# Streaming chat completion
curl http://localhost:11434/api/chat -d '{
  "model": "llama3",
  "messages": [{ "role": "user", "content": "Explain TCP in haiku" }],
  "stream": true
}'
```
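
With `"stream": true`, the reply comes back as newline-delimited JSON: one object per chunk, each carrying a text fragment in `message.content`, and a final object with `"done": true`. A Python sketch that consumes that stream with only the standard library (function names are mine):

```python
import json
import urllib.request
from typing import Iterable, Iterator


def iter_chunks(lines: Iterable[bytes]) -> Iterator[str]:
    """Yield text fragments from Ollama's newline-delimited JSON stream."""
    for raw in lines:
        line = raw.strip()
        if not line:
            continue  # skip blank lines defensively
        event = json.loads(line)
        if "error" in event:
            raise RuntimeError(event["error"])
        piece = event.get("message", {}).get("content", "")
        if piece:
            yield piece
        if event.get("done"):
            break  # the final object carries timing stats, not more text


def stream_chat(model: str, prompt: str, base_url: str = "http://localhost:11434") -> str:
    """Print the reply as it streams in and return the full text."""
    body = json.dumps({
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": True,
    }).encode("utf-8")
    req = urllib.request.Request(
        f"{base_url}/api/chat", data=body, headers={"Content-Type": "application/json"}
    )
    parts = []
    with urllib.request.urlopen(req) as resp:
        # The response object iterates line by line: one JSON object per line.
        for piece in iter_chunks(resp):
            print(piece, end="", flush=True)
            parts.append(piece)
    print()
    return "".join(parts)
```

Keeping the parser separate from the HTTP call means you can unit-test it with canned lines instead of a live server.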

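Streamed replies arrive as newline-delimited JSON, one object per chunk, with `"done": true` on the last one. Need embeddings? Swap the route to `/api/embeddings` (newer releases also offer `/api/embed`, which accepts a list of inputs). Want Server-Sent Events instead? Point an OpenAI-style client at the OpenAI-compatible `/v1/chat/completions` endpoint, which streams SSE. Either way, the API is plain HTTP and JSON, so anything that can make a web request can talk to it.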
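
For embeddings, this sketch targets the newer `/api/embed` route, which takes an `input` string or list and returns an `embeddings` array (the older `/api/embeddings` route takes a single `prompt` instead). `nomic-embed-text` is just an example embedding model you’d need to pull first, and `rank()` is a hypothetical helper showing the usual cosine-similarity search:

```python
import json
import math
import urllib.request


def embed(texts: list, model: str = "nomic-embed-text",
          base_url: str = "http://localhost:11434") -> list:
    """POST /api/embed and return one vector per input string."""
    body = json.dumps({"model": model, "input": texts}).encode("utf-8")
    req = urllib.request.Request(
        f"{base_url}/api/embed", data=body, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(req, timeout=120) as resp:
        return json.load(resp)["embeddings"]


def cosine(a: list, b: list) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def rank(query: str, docs: list, model: str = "nomic-embed-text") -> list:
    """Return (score, doc) pairs, most similar first."""
    vectors = embed([query] + docs, model=model)
    query_vec, doc_vecs = vectors[0], vectors[1:]
    return sorted(((cosine(query_vec, v), d) for v, d in zip(doc_vecs, docs)), reverse=True)
```

Embedding the query and documents in one request keeps it to a single round trip.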

7. Pro Environment Tweaks

Crack open your `.bashrc` (or the service’s systemd override) and add:

```bash
# Keep models loaded in memory for one full day
export OLLAMA_KEEP_ALIVE=24h

# Listen on all interfaces: reachable from your LAN, and from the internet too
# unless a firewall or NAT keeps port 11434 closed
export OLLAMA_HOST=0.0.0.0

# Allow browser requests from your front-end's origin (CORS)
export OLLAMA_ORIGINS=https://mydashboard.local
```

A quick `sudo systemctl restart ollama` and you’re good. Pro tip: setting `OLLAMA_MAX_LOADED_MODELS=2` lets you hot-swap between a chat model and an embedding model without constant reloads.
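
One catch: `export` lines only reach an `ollama serve` started from that shell. The Linux installer runs Ollama as a systemd service, which reads its environment from unit files instead. This Python sketch renders the equivalent drop-in (`Environment="KEY=value"` lines under `[Service]`); the helper name is mine:

```python
from typing import Mapping


def systemd_override(env: Mapping[str, str]) -> str:
    """Render a systemd drop-in that sets environment variables for ollama.service."""
    lines = ["[Service]"]
    for key, value in env.items():
        if '"' in value or "\n" in value:
            raise ValueError(f"value for {key!r} needs manual quoting")
        lines.append(f'Environment="{key}={value}"')
    return "\n".join(lines) + "\n"


settings = {
    "OLLAMA_KEEP_ALIVE": "24h",
    "OLLAMA_HOST": "0.0.0.0",
    "OLLAMA_ORIGINS": "https://mydashboard.local",
    "OLLAMA_MAX_LOADED_MODELS": "2",
}
print(systemd_override(settings), end="")
```

Paste the output into `sudo systemctl edit ollama`, or save it as `/etc/systemd/system/ollama.service.d/override.conf` and run `sudo systemctl daemon-reload` before restarting.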

8. Locking Down Your Port 11434

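Out of the box, Ollama listens only on 127.0.0.1 and has no authentication, so once you set `OLLAMA_HOST=0.0.0.0`, anyone who can reach port 11434 can use your models. Two ways to sleep at night:

- Reverse-proxy through NGINX and gate requests with `auth_request`.
- Tunnel via Tailscale and remove public exposure entirely.

Bonus: drop an iptables rule that accepts 11434 only from your workstation’s IP and drops everything else.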
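
If you’d rather enforce that allowlist inside your own proxy or gateway code, the check itself is tiny. A Python sketch using the standard `ipaddress` module; the addresses in the usage note are hypothetical:

```python
import ipaddress
from typing import Iterable


def is_allowed(client_ip: str, allowed: Iterable[str]) -> bool:
    """True if client_ip falls inside any allowed address or CIDR range."""
    try:
        addr = ipaddress.ip_address(client_ip)
    except ValueError:
        return False  # malformed addresses are rejected, never trusted
    for entry in allowed:
        # strict=False lets a bare address like "192.168.1.42" act as a /32.
        if addr in ipaddress.ip_network(entry, strict=False):
            return True  # IPv4 vs IPv6 mismatches simply never match
    return False
```

`is_allowed("192.168.1.42", ["192.168.1.42", "100.64.0.0/10"])` is True; the second range is the CGNAT block Tailscale assigns addresses from, which pairs nicely with the tunnel option.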

9. Real-World Workflows

Here’s where the llama really earns its hay:

- Design Pipeline. I use Ollama’s `llava` vision model to summarize Figma boards, then auto-draft copy.
- Code Reviews. A Git pre-commit hook pipes diffs through `ollama run code-review-bot` and blocks sloppy fixes.
- Self-hosted Chat. Combine Ollama with MCP Servers to let Slack users chat with on-prem LLMs.
- Edge AI. Deploy compressed 4 B weights onto Android devices following our Edge AI guide.
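
Here’s one way the code-review hook could look. It’s a sketch, not a drop-in: it calls the HTTP API’s `/api/generate` instead of shelling out to `ollama run`, assumes you’ve already built a custom model named `code-review-bot`, and invents an APPROVE/BLOCK first-line convention that your Modelfile’s `SYSTEM` prompt would need to enforce:

```python
#!/usr/bin/env python3
"""Hypothetical pre-commit hook: ask a local model to review the staged diff."""
import json
import subprocess
import urllib.request

MODEL = "code-review-bot"  # custom model built with `ollama create`
OLLAMA_URL = "http://localhost:11434/api/generate"
MAX_DIFF_CHARS = 20_000    # arbitrary cap so huge diffs don't overflow the context


def staged_diff() -> str:
    """Return the diff of what is about to be committed."""
    result = subprocess.run(
        ["git", "diff", "--cached"], capture_output=True, text=True, check=True
    )
    return result.stdout


def review(diff: str) -> str:
    """Send the (truncated) diff to the model and return its full reply."""
    prompt = (
        "Review this diff. Reply APPROVE or BLOCK on the first line, then explain.\n\n"
        + diff[:MAX_DIFF_CHARS]
    )
    body = json.dumps({"model": MODEL, "prompt": prompt, "stream": False}).encode("utf-8")
    req = urllib.request.Request(
        OLLAMA_URL, data=body, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(req, timeout=300) as resp:
        return json.load(resp)["response"]


def is_blocking(reply: str) -> bool:
    """True if the first non-empty line of the reply starts with BLOCK."""
    for line in reply.splitlines():
        if line.strip():
            return line.strip().upper().startswith("BLOCK")
    return False


def main() -> int:
    """Exit code for Git: 0 lets the commit through, 1 aborts it."""
    diff = staged_diff()
    if not diff.strip():
        return 0
    reply = review(diff)
    print(reply)
    return 1 if is_blocking(reply) else 0
```

Save it as `.git/hooks/pre-commit`, add `raise SystemExit(main())` as its last line, and `chmod +x` it. A non-zero exit code is what makes Git abort the commit.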

10. Troubleshooting & FAQ

Model eats 16 GB RAM, help!

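Switch to q4_K_M quantization, shrink the context window with `num_ctx`, or, on a machine with a GPU, raise the `num_gpu` parameter (the number of layers offloaded to the GPU) so less of the model lives in system RAM.

Responses feel slow on an M2 Air.

Set num_ctx to 2048 and prefer 7 B (or smaller) weights.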

How do I upgrade safely?

Stop the daemon, run the installer again, then ollama list. All weights stay intact.
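
To confirm the upgrade actually took, note the server version before and after. `GET /api/version` returns a small JSON object with a `version` string; this Python sketch (helper names are mine) reads and compares it:

```python
import json
import urllib.request


def server_version(base_url: str = "http://localhost:11434") -> str:
    """Return the running server's version string, e.g. '0.5.7'."""
    with urllib.request.urlopen(f"{base_url}/api/version", timeout=5) as resp:
        return json.load(resp)["version"]


def parse_version(version: str) -> tuple:
    """'0.5.7' -> (0, 5, 7); a pre-release suffix such as '-rc1' is ignored."""
    core = version.split("-", 1)[0]
    return tuple(int(part) for part in core.split("."))


def upgraded(before: str, after: str) -> bool:
    """True if the version after the upgrade is strictly newer."""
    return parse_version(after) > parse_version(before)
```

Call `server_version()` before stopping the daemon and again once it restarts; `upgraded(before, after)` should come back True.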

11. Wrap-Up

That wraps up our massive Ollama Tutorial deep dive. We covered installs, GPUs, quantization, Modelfiles, REST tricks, and security: the core techniques for running local LLMs like a pro. Bookmark this guide, share it with your team, and keep experimenting. Next time someone brags about their cloud latency, fire up your local llama and smile as the answer streams in without a single network hop.

Until next time—keep hacking, keep learning, and may your prompts be ever coherent.

FoxDoo Technology
