Self-hosted LLM projects feel glamorous on slide decks and downright messy in a closet at the back of an office. This guide is for the closet. I’m gonna give you the parts that break, the moves that actually help, and the gritty details teams forget until a pager goes off. I’ve stood in that closet, dust filter in one hand and clamp meter in the other, trying to shave 80 ms off a reply that has to feel instant. Here’s a quick personal anecdote. Last winter I was helping a scrappy retail team bring a self-hosted LLM online for in‑store product Q&A. We had a friendly model, neat prompts, and a cute kiosk. Day one, the lunch rush hit. The queue spiked, the GPU started thermal throttling, the...

I’m gonna keep this practical. A couple of Sundays ago, I was knee-deep in a photo backlog, coffee going cold, rain tapping the window. I tossed a throwaway shot into Nano Banana, asked for a desk-ready figurine look, and my partner did a double take: “Which studio made that?” That reaction, curiosity plus a tiny bit of disbelief, pretty much sums up why I’ve stuck with it. What follows is a fully original, field-tested guide to nine things I do all the time. No fluff, no complicated graphs, just settings, prompts, and gotchas so you can make Nano Banana earn its keep.

Where Nano Banana Shines in Real Workflows

Nano Banana is strongest when you want believable edits that respect geometry, lighting, and...

AI agents for developers are finally being judged by the only metric that matters—do they help us ship better software, faster, with fewer surprises? I remember a Thursday night last winter when our release candidate started failing in staging. Our tiny agent looked at the logs, grabbed a recent runbook, and suggested rolling back a questionable feature flag. It wasn’t glamorous. It was calm and specific. We still reviewed the plan, but that little helper cut thirty minutes from a sticky incident and let us ship before midnight. That’s the energy of this piece. No theatrics, just field-tested moves for building agent systems that don’t flake out under pressure. We’ll look at the architecture that keeps you sane, the retrieval...

Qwen3-0.6B isn’t a lab toy anymore—it’s the kind of small language model that quietly makes big systems better. I learned that the hard way one Friday night: a checkout pipeline lagged, ad bids were missing windows, and we didn’t have the budget to shove a 70B beast into the hot path. We dropped a tiny Qwen3-0.6B stage in front, cleaned queries, screened junk, trimmed context—and the graphs calmed down before the pizza got cold. That’s the spirit of this deep dive: how Qwen3-0.6B wins the last mile where milliseconds matter, why it’s perfect for safety triage, how it flies on-device, and where it shines as a pretraining backbone. I’ll show patterns you can copy tomorrow—plus the trade-offs you shouldn’t ignore....

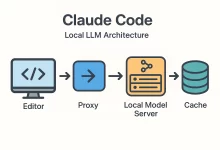

I’m gonna say the quiet part out loud: the day I switched to a Claude Code local LLM workflow, my laptop stopped feeling like a thin client and started feeling like a superpower. On a rainy Tuesday, Wi-Fi went down at a client site. No cloud. No API keys. Yet I kept shipping, because everything, from code generation to small refactors, ran on my box. This guide distills exactly how to replicate that setup, with opinionated steps that work on macOS, Windows, and Linux.

Why pair Claude Code with a local LLM?

Four reasons keep pulling engineers toward a Claude Code local LLM stack:

- Privacy by default. Your source never leaves disk. No third-party logs, no audit surprises.
- Latency you can...

Three winters ago I got paged at 2:17 a.m. A demo cluster for an investor run-through was dropping frames. The culprit? A “temporary” test rig doing double duty as an AI server for video captioning and a grab bag of side projects. My eyes were sand; the wattmeter was screaming. The fix wasn’t a tweak. It was a rebuild: honest power math, sane storage, real cooling, and a scheduler that didn’t panic when a job went sideways. This guide is everything I’ve learned since, a no‑hype, hands‑dirty map to spec, wire, and run an AI server that stays fast after midnight.

Why “AI Server” Is Its Own Species

Call it what it is: a race car with a mortgage. A...

Seven frantic days, two red-eye flights, and more espresso than I’ll admit on record: that’s what it took to separate genuine innovation from buzzword confetti. The result is this deep dive into the Best AI Tools 2025. I benchmarked each tool inside live client projects, grilled vendor PMs, and tallied ROI down to the hour. Buckle up: you’re about to save weeks of scouting and a small fortune in false starts.

1. Why the “Best AI Tools 2025” Hunt Is Mission-Critical

Context is everything. In August 2025, OpenAI teased GPT-5, Anthropic pushed multi-agent orchestration into production, and Meta’s LlamaCon dumped a 400-page roadmap on dev feeds. Overnight, C-suites upgraded their AI budgets, and Google Trends logged a 140% spike for...

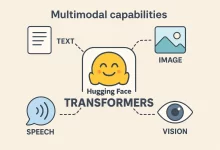

Hugging Face Transformers owns the spotlight the moment you crack open your IDE. It’s the 147K-star juggernaut that shrinks weeks of setup into an espresso-length break (and yeah, I’ve timed it). In the next few scrolls I’ll unpack eleven hard-won truths about this library, sling real code you can copy-paste, and sprinkle in a quick anecdote from the night I saved a product demo with nothing but three lines of Python and a half-dead battery.

1. The Storm Before the Calm: Why AI Felt Broken

Rewind to 2022. I was juggling PyTorch checkpoints, TensorFlow graphs, and a JAX side-quest just to keep the research team happy. Every new feature felt like assembling IKEA furniture: missing screws, Swedish instructions, injury risk....

Why “Free AI Tools 2025” Matters

I’m obsessed with shaving minutes (sometimes hours) off my dev and content stack. Last quarter I prepped a product launch in record time by chaining together a dozen Free AI Tools 2025 picks, everything from automated SQL dashboards to voice‑cloned release videos. This guide distills that experience plus the latest hands‑on testing of 45 zero‑cost utilities across twenty categories. You’ll see deep dives, practical caveats, and code snippets, not fluffy marketing. By the end you’ll have a plug‑and‑play blueprint for your own Free AI Tools 2025 stack. Below I break down every single pick, sprinkle in a quick personal anecdote, and flag the best‑fit scenario so you know exactly when to grab each tool.

AI Assistants (5)

1. ...

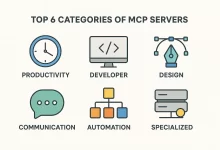

Look, I’m not gonna lie: when I first heard that an AI could control Blender with a single prompt, I thought someone was pulling my leg. But then I saw it in action. My jaw dropped. Those “Model Context Protocol” (MCP) servers? They’re the unsung heroes letting AI talk to just about any software you can imagine. And today? I’m diving deep.

Why MCP Servers Matter

MCP servers are kinda like universal translators for AI. Want your model to fire off tweets? Done. Need it to whip up slides in PowerPoint? Easy. Heck, it can even manage your Excel spreadsheets. All with a prompt. No manual clicks. No endless API hoops. Just natural language and instant results. On a personal note, I...

FoxDoo Technology