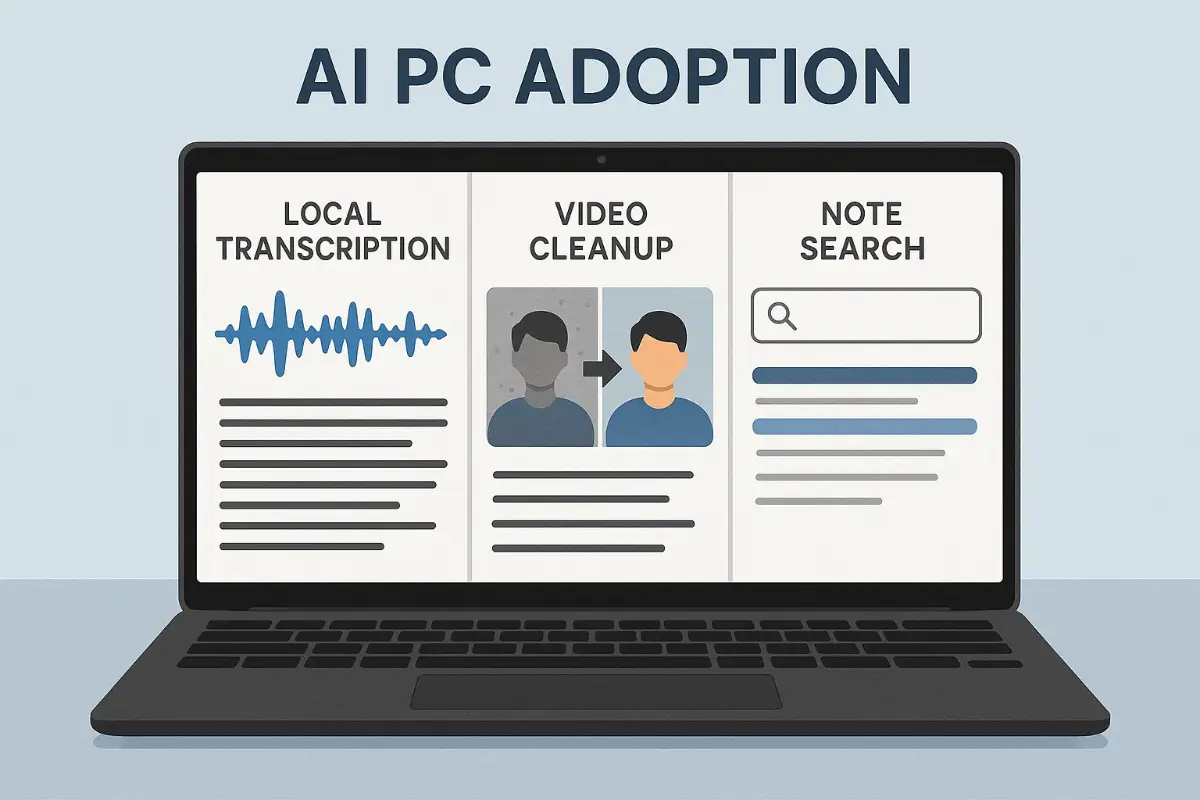

The biggest change you’ll notice this week isn’t a new app; it’s how your laptop behaves. With AI PC adoption finally hitting critical mass, everyday chores—meeting notes, quick video fixes, audio cleanup, document search—snap into place with a speed and quiet confidence that used to require a fat cloud connection and a noisy fan ramp. It’s the sort of upgrade you feel on a random Tuesday when a task that used to take eight minutes takes two.

What actually changed today

- NPUs everywhere: New consumer laptops and minis ship with neural processing units designed for low-power inference. The result is local AI that doesn’t hijack your CPU/GPU or your battery.

- System-level features: Current Windows and macOS releases expose on-device summarization, transcription, translation, and image understanding straight from the Share/Services menus.

- Native hooks in real apps: Editors, note tools, and NLEs now call local models directly, so you use the tools you know instead of juggling sidecar widgets.

Real-world gains you’ll feel this week

- Meetings → minutes: Local transcription that survives bad Wi-Fi; auto-extracted action items you can paste into your task manager.

- Video polish on the couch: Noise removal, scene detection, and caption drafts while the fans stay whisper-quiet.

- Your stuff, instantly searchable: Type “that pricing slide from the Q3 deck” and your machine pulls the thumbnail from local vectors without calling home.

- Repo-aware coding chores: Boilerplate scaffolds and unit tests generated against your local code—no full repo upload required.
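If "local vectors" sounds abstract, here is a minimal sketch of the idea behind that kind of search. It assumes each file has already been turned into an embedding by some on-device model; the three-number vectors and file names below are made up purely for illustration, and `search` is a hypothetical helper, not any OS or app API.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def search(query_vec, index, top_k=1):
    """Return the names of the top_k files whose vectors are closest to the query."""
    ranked = sorted(index.items(), key=lambda kv: cosine(query_vec, kv[1]), reverse=True)
    return [name for name, _ in ranked[:top_k]]

# Toy index: in practice an on-device embedding model would produce these vectors.
index = {
    "q3_deck_slide_12_pricing.png": [0.9, 0.1, 0.2],
    "team_offsite_photo.jpg": [0.1, 0.8, 0.3],
    "q2_roadmap.pdf": [0.4, 0.2, 0.9],
}

# Stand-in for the embedding of "that pricing slide from the Q3 deck".
query = [0.85, 0.15, 0.25]

print(search(query, index))
```

Everything here runs on the machine itself: the query is compared against vectors stored on disk, so nothing has to leave the laptop to find the slide.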

Why this is different from last year’s demos

Last year, “AI on your PC” meant a pretty demo and a hot chassis. With broad AI PC adoption, the workload runs in a power-sipping lane while your CPU and GPU stay free. The experience is “quiet speed”: you export a 1080p clip with denoising and auto-captions and still browse without lag. Small jobs feel instant; big jobs feel possible without a wall socket.

Privacy and control without heroics

On-device models mean your messy draft, client PDF, or sensitive call audio stays where it started. New control panels clearly label what is processed locally and what leaves the machine, with per-app toggles. For many teams, this alone unblocks pilot projects that stalled on privacy reviews.

A buyer’s checklist (read before you click “add to cart”)

- NPU throughput: Treat TOPS (trillions of operations per second) like you treat core counts: a useful number, but only when mature drivers and app support back it up.

- Memory headroom: 16 GB is workable; 32 GB leaves room to keep a video timeline, several other apps, and note search running at once.

- Thermals matter: A real vapor chamber beats thin-for-thin’s-sake. Sustained performance > peak numbers.

- App ecosystem: Verify your editor, notes app, and NLE list on-device modes—not just generic “AI” badges.

One-hour setup that pays back immediately

- Update OS & drivers: Enable the on-device model pack and NPU runtime.

- Wire three apps: Meeting tool (local transcript), notes app (local search/summarize), video editor (NPU effects).

- Automate two loops: “Record → summarize → task list” and “Screenshot → OCR → file to project”.

- Measure for a week: Track minutes saved on three repeat chores. If numbers stall, switch integrations or prompts.
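The "measure for a week" step can be as simple as a short script. This is a rough sketch, assuming you jot down before and after timings by hand; the chore names and numbers are invented examples, and `minutes_saved` is a hypothetical helper.

```python
def minutes_saved(log):
    """Sum (before - after) minutes for every recorded run of each chore."""
    return {chore: sum(before - after for before, after in runs)
            for chore, runs in log.items()}

# One week of hand-logged timings: (minutes before, minutes after) per run.
week = {
    "meeting notes": [(8, 2), (8, 3), (10, 2)],
    "caption draft": [(15, 6), (12, 5)],
    "file screenshot": [(3, 3), (3, 3)],
}

saved = minutes_saved(week)

# Chores with no gain are the ones to revisit (different integration or prompt).
stalled = [chore for chore, minutes in saved.items() if minutes <= 0]

print(saved)
print("Total minutes saved:", sum(saved.values()))
print("Stalled:", stalled)
```

A spreadsheet does the same job; the point is to compare the same three chores week over week rather than trust a general feeling of speed.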

Common pitfalls (and quick fixes)

- Battery surprise: Long photo batches still draw power. Use “plugged-in only” profiles for heavy queues.

- Duplicate models: Some apps ship their own runtimes. Consolidate to the OS-level pack to avoid bloat.

- Artifact hunts: For captions and audio cleanup, set confidence thresholds and review only below-threshold clips.
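The confidence-threshold tip comes down to a simple filter. The sketch below assumes your captioning or cleanup tool can export a confidence score per clip; the field names, sample data, and the 0.85 cutoff are all illustrative assumptions, not defaults from any particular app.

```python
def needs_review(segments, threshold=0.85):
    """Return only the segments whose confidence falls below the threshold."""
    return [s for s in segments if s["confidence"] < threshold]

# Hypothetical export from a captioning pass: one entry per clip.
segments = [
    {"clip": "intro.mp4", "confidence": 0.97},
    {"clip": "interview_a.mp4", "confidence": 0.72},
    {"clip": "b_roll.mp4", "confidence": 0.91},
    {"clip": "outro.mp4", "confidence": 0.60},
]

# Review queue: just the clips the model was unsure about.
queue = [s["clip"] for s in needs_review(segments)]
print(queue)
```

Raise the threshold if errors slip through, lower it if you end up reviewing clips that were already fine.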

Who benefits most right now

Creators juggling clips, social cuts, and transcripts; analysts who live in PDFs and slide decks; engineers who want fast, local context for small refactors. If your day is a mosaic of small tasks, AI PC adoption is the rare upgrade that buys back half-hours—quietly—every day.

A quick personal anecdote

Yesterday I rewrote a product note between gates with airport Wi-Fi cutting in and out. Local rewrite + tone adjust didn’t care. I boarded with a clean draft, action items pulled into my task list, and a cool chassis. That was the moment AI PC adoption stopped feeling like a slide deck and started feeling like time I got back.

Bottom line

The shift isn’t flashy; it’s cumulative. With AI PC adoption widening, your computer becomes a place where small, boring tasks finish themselves—privately, quickly, and without fanfare. The best tech disappears into the work. This is one of those weeks.

FoxDoo Technology
