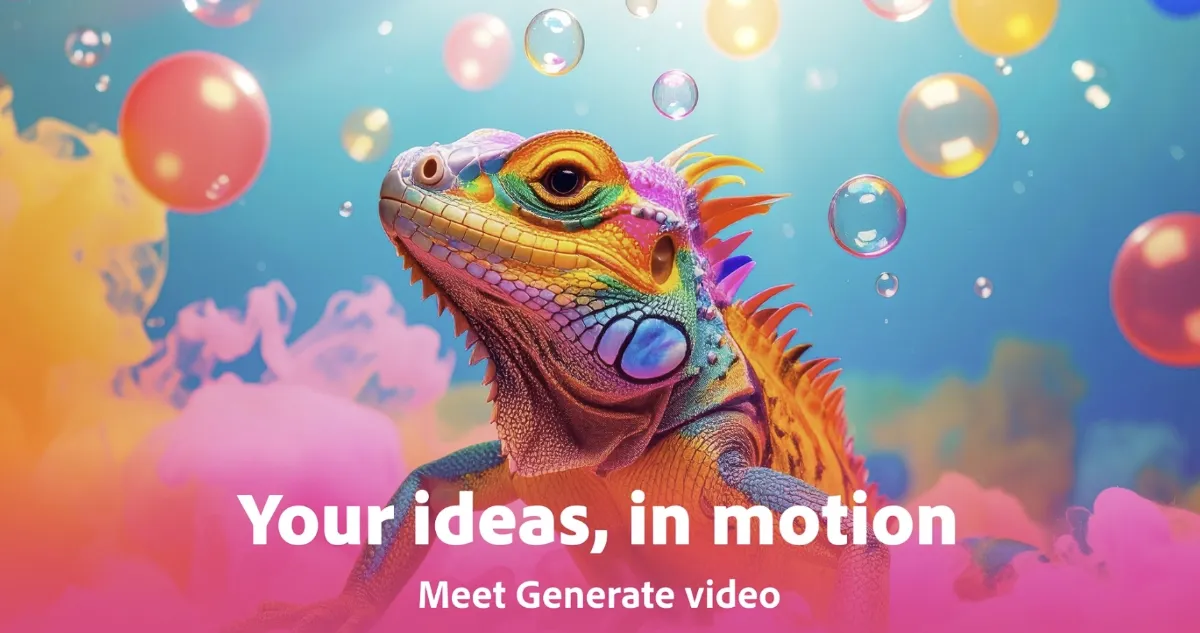

Today, July 20, 2025, Adobe officially unveiled Firefly 2, its most ambitious generative AI model yet, which turns simple text prompts into polished video clips in seconds. As someone who fiddles with video edits on weekends, I couldn’t resist diving into the beta as soon as it went live. Skipping the usual storyboarding and shooting, I typed “sunset over a lavender field with drifting lanterns,” hit Generate, and within moments had a dreamy 15-second sequence ready to refine.

Firefly 2 builds on its predecessor’s image-generation strengths, layering on a high-performance video engine that handles motion, continuity, and style in one package. The model can track objects across frames, maintain consistent lighting, and even apply cinematic camera moves like dolly-ins and pans. I was particularly impressed by the built-in “Style Transfer” feature: after generating my lavender scene, I switched to a “Noir Film” preset and suddenly had moody black-and-white footage with subtle film grain—all without leaving the Firefly interface.

Behind the scenes, Firefly 2 runs on a hybrid cloud architecture. Lightweight inference happens locally for quick previews, keeping preview latency below 500 milliseconds, while heavier render passes run on Adobe’s GPU clusters for final output. This split-mode workflow means hobbyists can iterate on a laptop, then export high-resolution 4K clips in minutes. In my test, a two-minute sequence took under five minutes to render at full resolution, a fraction of the time a traditional non-linear editing (NLE) pipeline would need.
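Adobe hasn’t said how Firefly 2 decides where each job runs, so here is only a toy Python sketch of the split-mode idea under stated assumptions. `RenderJob`, `choose_backend`, the per-job latency estimate, and the cloud fallback for slow previews are all hypothetical and are not part of any Adobe API. Only the 500-millisecond preview target comes from the description above.

```python
from dataclasses import dataclass

# Hypothetical sketch: none of these names come from Adobe's products or APIs.
PREVIEW_BUDGET_MS = 500  # preview latency target quoted in the article


@dataclass
class RenderJob:
    prompt: str
    final_export: bool           # True for the full-resolution output pass
    est_local_latency_ms: float  # assumed estimate of on-device inference time


def choose_backend(job: RenderJob) -> str:
    """Route a job to 'local' or 'cloud' in a split-mode workflow (illustrative)."""
    if job.final_export:
        # Heavy final render passes go to remote GPU clusters.
        return "cloud"
    if job.est_local_latency_ms <= PREVIEW_BUDGET_MS:
        # A lightweight preview that fits the latency budget stays on the user's machine.
        return "local"
    # Assumed fallback: a preview too slow to run locally is sent to the cloud instead.
    return "cloud"


if __name__ == "__main__":
    jobs = [
        RenderJob("lavender field preview", final_export=False, est_local_latency_ms=320),
        RenderJob("lavender field 4K export", final_export=True, est_local_latency_ms=9000),
    ]
    for job in jobs:
        print(job.prompt, "->", choose_backend(job))
```

Keeping the routing rule in one small function makes the trade-off explicit: previews optimize for responsiveness on local hardware, while final exports optimize for throughput on remote GPUs.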

Interactivity got a boost too. Firefly 2 introduces “Video Layers,” letting you generate background, foreground, and overlay elements separately. I created floating text animations for a client promo by writing “gold metallic title fade-in at frame 10,” and Firefly 2 generated a perfectly timed 3D text clip I could drop over any footage. No complex keyframing required.

Adobe hasn’t forgotten collaboration. Firefly 2 projects sync live to Creative Cloud Libraries, so teams worldwide can iterate on the same video in real time. I shared my lavender demo with a colleague in Tokyo, and she tweaked the color grading from her browser while I adjusted the motion speed from my studio. It felt like Google Docs, but for video.

Content safety is baked in. Firefly 2 includes a “Community Guidelines” filter that automatically blocks prompts requesting harmful or copyrighted material. When I tried a trademarked movie title, the system politely nudged me to rephrase. For sensitive use cases, such as medical animations, Adobe offers an “Enterprise Safe Mode” that routes processing through isolated, audited environments.

Firefly 2 also brings audio generation into the mix. You can type a mood or a brief dialogue cue (“whispering voiceover: welcome to the future”), and Firefly 2 crafts an AI voice track complete with ambient sound effects. In my demo, I layered a calm, cinematic narrator over my lavender field clip, creating a short branded teaser ready for social media.

Pricing follows Adobe’s Creative Cloud model. Firefly 2 features are included in the All-Apps plan at no extra cost, with a pay-as-you-go option for occasional users. Early adopters in Adobe’s beta program rave about productivity gains—one indie filmmaker reported cutting pre-production time by 60%. For anyone juggling tight deadlines and small budgets, that’s a game-changer.

Looking ahead, Adobe plans to integrate Firefly 2 into Premiere Pro and After Effects by year-end, enabling one-click AI assistants inside familiar editing timelines. Imagine generating b-roll, animating lower thirds, or fixing shaky footage with a text prompt. It’s clear Adobe’s vision is to make generative AI the backbone of every creative workflow, not just a novelty.

All in all, Firefly 2 feels like a glimpse into the future of video: an era where the only limit is your imagination. If you’ve ever wished you could conjure cinematic sequences with a few keystrokes, now’s your chance to jump in and play with the AI tools redefining content creation.

FoxDoo Technology