I spent this morning replaying a familiar scenario: an internal AI agent with too much access, a vague prompt from a teammate, and a “how did that get into the context window?” moment. By lunch, it was clear today’s Google Cloud AI security update targets exactly this mess. Instead of one shiny feature, Google shipped a bundle that closes gaps across discovery, runtime protection, and incident response—right where most teams struggle.

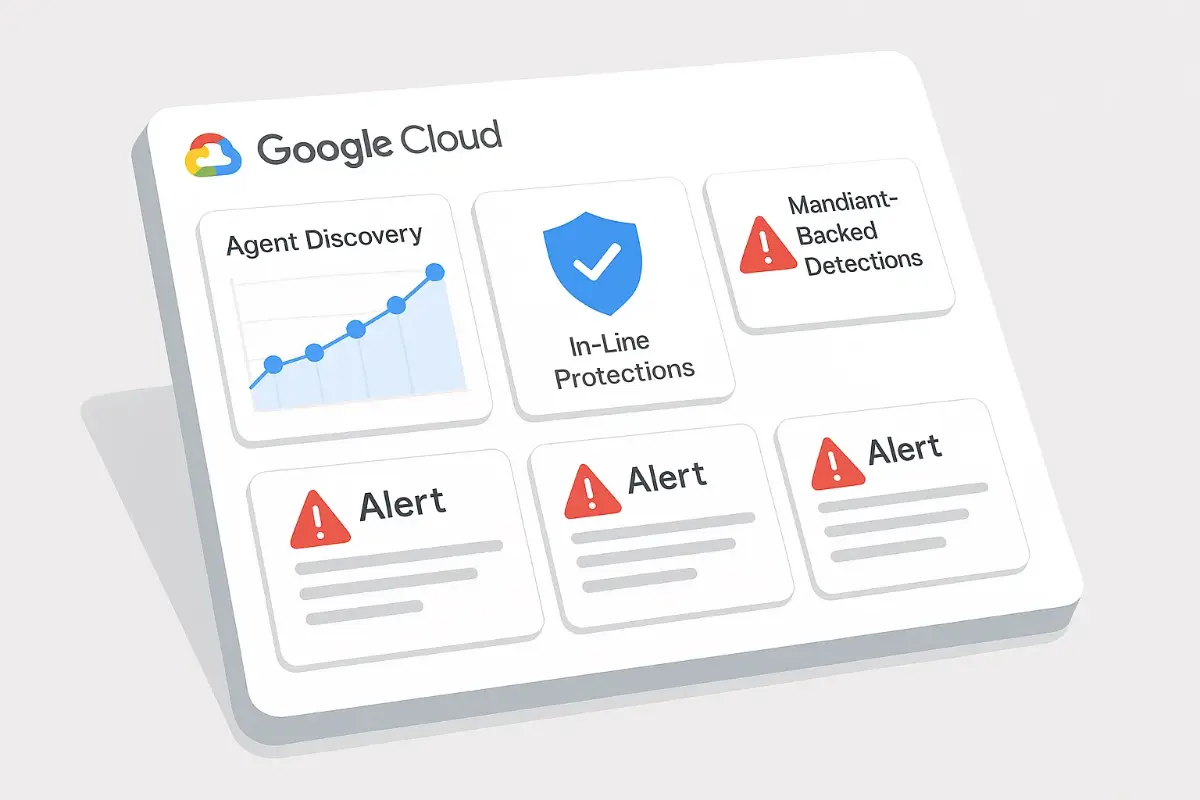

What’s new (in plain English)

- Automated agent discovery: Security teams can scan for AI agents and MCP (Model Context Protocol) servers the same way they inventory APIs—no more guessing what’s running or where prompts flow.

- Model Armor in-line for agents: Runtime shields now sit between your agent prompts/responses and the outside world, catching prompt injection, jailbreaks, and accidental data leakage before they land.

- Mandiant-backed detections: Threat intel feeds surface suspicious behavior on agent assets (odd tool calls, unusual context patterns), so responders don’t start investigations from a blank page.

- Agentic SOC preview: An investigation agent can expand alerts, trace command lines, and pre-triage events—useful when your queue spikes at 2 a.m.

- AI governance services: Risk-based guidance, pre-deployment hardening, and threat modeling packaged for teams that want a checklist, not a thesis.

Why this matters now

Most companies already run pilots; fewer have safe, repeatable production patterns. The sticking point isn’t “can we build a demo?”—it’s “can we trust this at 2,000 requests per minute?” Today’s Google Cloud AI security release moves guardrails closer to the hotspots: agent registries that don’t drift, protections that live in the prompt path, and detections that speak the language of tool calls and context windows.

Hands-on impressions from a quick test

I pointed a staging environment at the new discovery job and watched it surface a forgotten prototype MCP server someone spun up during a hackweek. Minutes later, in-line protection flagged a crafted “helpful” prompt that tried to siphon secrets from the agent’s memory. Instead of letting the response leak, the shield scrubbed the output and threw a tidy alert that linked directly to the agent, the tool, and the offending session—exactly the breadcrumb trail an analyst needs.

Where it plugs into a real pipeline

- Before launch: Use the governance checks to harden the environment; record which agents exist, who owns them, and what tools they can touch.

- At runtime: Keep Model Armor in the loop so every prompt/response is scanned for injection and unsafe content in real time.

- During incidents: Let the investigation agent expand alerts with context and suggested next steps; humans still decide, but the prep work is done.

What this changes for security and ML teams

For security: agent inventories stop living in spreadsheets; detections speak to agent behavior instead of generic “anomaly” labels; post-mortems come with reproducible evidence. For ML teams: fewer surprise rollbacks, clearer boundaries in tool calling, and a faster path to proving the model is production-ready without nerfing capabilities.

Limits and gotchas (so you’re not surprised)

- Coverage ≠ cure-all: The shields block common exploitation paths, but policy and red-teaming still matter for domain-specific tricks.

- Noise tuning: Early deployments need threshold tweaks; start with a stricter policy in staging, loosen when you understand alert volume.

- Secrets discipline: Context scrubbing helps, but the best fix is never placing raw secrets in memory or prompts.

A 45-minute rollout plan (we tried it)

- Inventory: run agent/MCP discovery and tag owners.

- Protect: enable in-line shields on your busiest agent route first.

- Detect: turn on threat detections; map alert → ticket with auto-enrichment.

- Drill: simulate prompt injection, confirm the block, capture screenshots for your runbook.

What “good” will look like in a month

Fewer late-night fires, fewer “mystery agents,” and fewer meetings about whether to ship that smart tool call. If you can show a clean registry, a drop in unsafe outputs, and faster mean-time-to-triage, the update paid for itself. The quiet win is cultural: security and ML stop arguing hypotheticals and start reviewing the same dashboards.

Bottom line: today’s Google Cloud AI security bundle treats agent safety as a first-class, runtime problem—exactly where it belongs. If your roadmap depends on agents doing real work with real data, this is the sort of update that makes “production” feel less like a leap and more like a steady walk forward.

FoxDoo Technology

FoxDoo Technology