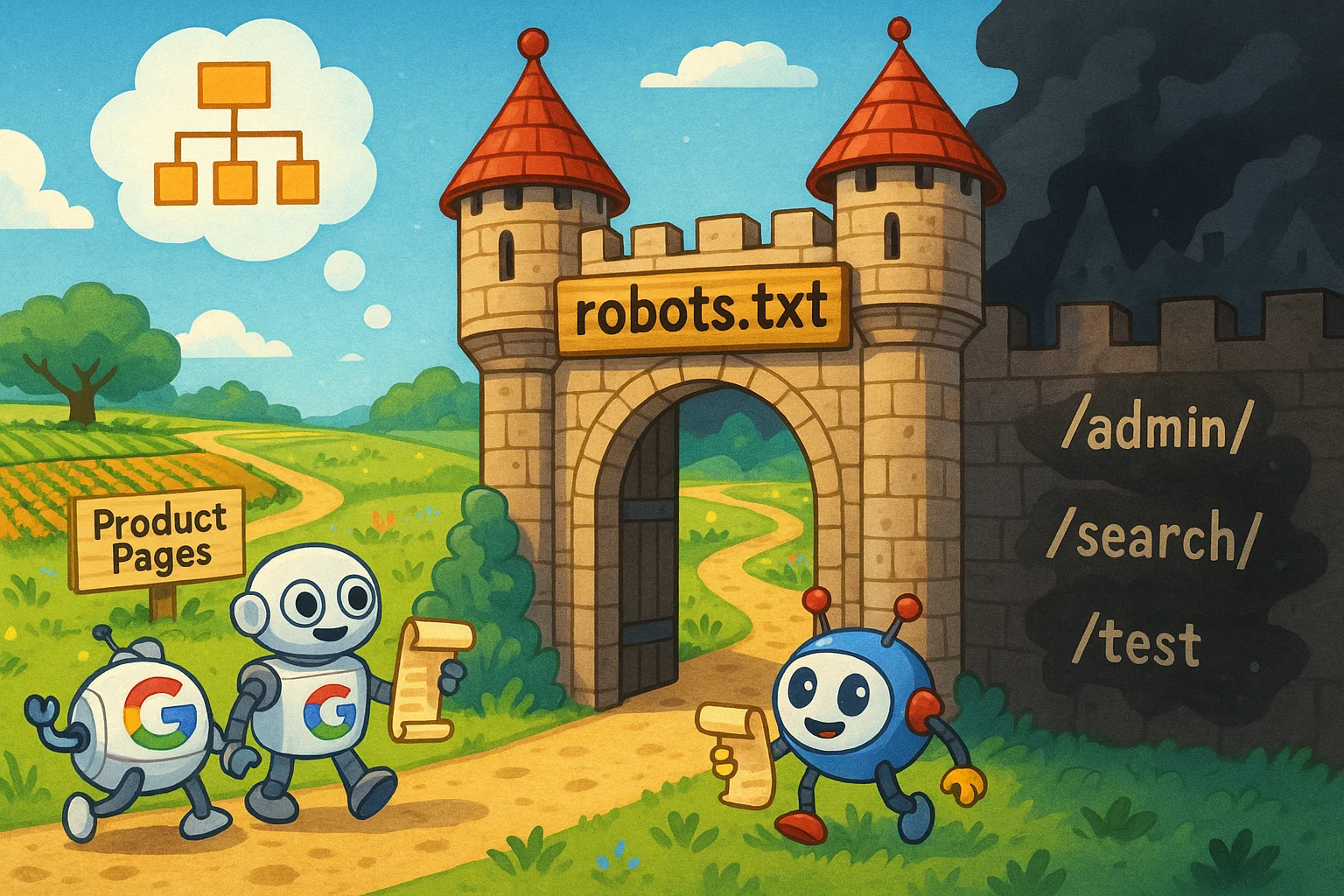

Search Engine Optimization (SEO) can feel like an ever-shifting labyrinth, but every site owner can master one fundamental file: robots.txt. Think of it as your website’s gatekeeper, directing search engine spiders where to crawl—and equally importantly—where not to crawl. A properly configured robots.txt ensures that Googlebot, Baiduspider, and their peers focus on your most valuable pages and ignore the low-value or sensitive ones. In this guide, we’ll walk through what robots.txt is, explore common rookie mistakes, reveal advanced tips to optimize crawl budget, and share practical check-and-fix methods to keep your file in top shape.

1. What Is robots.txt? The Digital Gatekeeper

At its core, robots.txt is a plain text file stored in the root directory (e.g., https://example.com/robots.txt) that instructs search engine spiders which parts of your website they can or cannot crawl. In everyday terms, imagine your site as a bustling shopping mall. Each search engine crawler (e.g., Googlebot, Baiduspider) is a delivery driver, and robots.txt is the map that tells them “which shops (pages) to visit and which warehouses (directories) to skip.” By clearly signaling high-priority areas (like blog posts or product pages) and hidden sections (like /admin/ or private testing zones), you guide bots to invest their limited “crawl budget” where it truly matters.

1.1 The Three Core Directives

- User-agent: Specifies the name of the crawler (e.g., `User-agent: Googlebot`, or `User-agent: *` for all bots).

- Disallow / Allow: `Disallow:` tells crawlers which paths to avoid (e.g., `Disallow: /admin/`). `Allow:` can override a broader disallow rule (e.g., `Allow: /admin/images/`).

- Sitemap: `Sitemap: https://example.com/sitemap.xml` helps bots find high-priority URLs.

Note: robots.txt blocks crawling, not indexing. Disallowed pages may still appear in search results if other pages link to them. The file is also publicly readable, so it cannot keep sensitive URLs secret.
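To see how a crawler reads these directives, here is a minimal sketch using Python's standard-library `urllib.robotparser` on a hypothetical `example.com`. One caveat shapes the rule order: `urllib.robotparser` applies the first matching rule in file order, while Google picks the most specific (longest) matching path, so the `Allow:` line is placed before the broader `Disallow:` to get the same answer under both interpretations.

```python
from urllib.robotparser import RobotFileParser

# Example rules from section 1.1. Allow comes first because urllib.robotparser
# uses the first matching rule; Google uses the longest match, which agrees here.
ROBOTS_TXT = """\
User-agent: *
Allow: /admin/images/
Disallow: /admin/

Sitemap: https://example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for path in ["/blog/post-1", "/admin/settings", "/admin/images/logo.png"]:
    allowed = parser.can_fetch("Googlebot", "https://example.com" + path)
    print(f"{path}: {'crawl' if allowed else 'skip'}")

# Sitemap lines are collected regardless of which User-agent group they follow.
print("Sitemaps:", parser.site_maps())
```

Only `/admin/settings` is skipped; the images folder stays crawlable because the narrower `Allow:` rule covers it.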

2. Five Common Rookie Mistakes (and How to Avoid Them)

Even seasoned webmasters have tripped over simple robots.txt pitfalls. Let’s examine the top five blunders that new site managers often commit:

2.1 Placing the File in the Wrong Directory

- Error: Putting `robots.txt` in `/blog/` instead of the root.

- Consequence: Crawlers won't see your rules, allowing full-site crawling.

- Fix: Upload it to the root directory (e.g., `https://example.com/robots.txt`).
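Crawlers derive the robots.txt location from the scheme and host of the URL they are about to fetch, never from the page's directory. A small Python sketch of that mapping (`robots_url` is a hypothetical helper name, and the URLs are illustrative):

```python
from urllib.parse import urlsplit, urlunsplit


def robots_url(page_url: str) -> str:
    """Return the robots.txt URL that governs page_url.

    Crawlers only request /robots.txt at the root of the scheme + host
    (+ port), so a copy stored under /blog/ or any other directory is
    never read.
    """
    parts = urlsplit(page_url)
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


for page in (
    "https://example.com/blog/my-post?ref=home",
    "https://en.example.com/about",
):
    print(page, "->", robots_url(page))
```

The second URL also shows why each subdomain is governed by its own file.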

2.2 Missing or Misplaced Slashes

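- Error: Using `Disallow: /admin` when you mean `Disallow: /admin/`.

- Consequence: Rules are prefix matches, so `/admin` also blocks unrelated URLs that merely start with the same characters, such as `/admin-guide.html` or `/administrators/`.

- Fix: Use a trailing slash to block exactly one directory and everything inside it (note that `/admin/` does not cover the bare `/admin` URL).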
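Plain `Disallow:` paths (without `*` or `$`) are case-sensitive prefix matches against the URL path. This short Python sketch, using made-up sample paths and a hypothetical `blocked_by` helper, compares what the two forms of the rule catch:

```python
def blocked_by(rule_path: str, url_paths: list[str]) -> list[str]:
    """Return the paths a wildcard-free Disallow rule would match.

    Without wildcards, a robots.txt rule is a case-sensitive prefix
    match on the URL path.
    """
    return [path for path in url_paths if path.startswith(rule_path)]


SAMPLE_PATHS = [
    "/admin",
    "/admin/login/",
    "/admin-guide.html",
    "/administrators/",
    "/Admin/login/",
]

for rule in ("/admin", "/admin/"):
    print(f"Disallow: {rule} blocks {blocked_by(rule, SAMPLE_PATHS)}")
```

`/admin` catches the directory plus unrelated pages that share the prefix, `/admin/` catches only the directory's contents, and neither matches `/Admin/login/` because matching is case-sensitive.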

2.3 Overusing Wildcards

- Error: `Disallow: /*?*`, which blocks every URL that has parameters.

- Consequence: Important dynamic pages are blocked unintentionally.

- Fix: Use targeted patterns (e.g., `Disallow: /*?sort=*`, `Allow: /product?color=*`).
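RFC 9309, the robots.txt standard that Google follows, defines `*` as "any sequence of characters" and a trailing `$` as "end of URL"; otherwise a path is a prefix match. The Python sketch below translates a pattern into a regular expression to compare the broad and targeted rules. The `pattern_matches` helper and the sample URLs are illustrative assumptions, not any crawler's actual code.

```python
import re


def pattern_matches(pattern: str, path: str) -> bool:
    """Check a robots.txt path pattern against a URL path plus query string.

    '*' matches any sequence of characters and a trailing '$' anchors the
    pattern to the end of the URL; otherwise the pattern is a prefix match.
    """
    regex = re.escape(pattern).replace(r"\*", ".*")
    if regex.endswith(r"\$"):
        regex = regex[:-2] + "$"
    return re.match(regex, path) is not None


URLS = [
    "/product?color=red",
    "/shoes?sort=price",
    "/shoes?color=red&sort=price",
    "/blog/robots-guide",
]

for pattern in ("/*?*", "/*?sort=*"):
    hits = [url for url in URLS if pattern_matches(pattern, url)]
    print(f"{pattern}: {hits}")
```

The targeted rule misses `/shoes?color=red&sort=price` because `sort` follows `&` rather than `?` there; covering that position would take a second rule such as `Disallow: /*&sort=`.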

2.4 Ignoring Multilingual Sites

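- Error: One file tries to manage all subdomains.

- Consequence: A robots.txt file applies only to the host it is served from, so rules written for other subdomains never take effect there, and a single shared file copied to every host can block paths that one language version needs.

- Fix: Use a separate robots.txt for each subdomain (e.g., `en.example.com/robots.txt`).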

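2.5 Mishandling Mobile Bot Rules

- Error: Relying on a separate `User-agent: Googlebot-Mobile` group, or blocking resources (CSS, JavaScript, images) that mobile pages need to render.

- Consequence: Google's smartphone crawler, which handles mobile-first indexing, obeys the rules for the `Googlebot` token (Google does not let you target it separately), so a `Googlebot-Mobile` group will not control it, while blocked resources can hurt how mobile pages are indexed.

- Fix: Make sure the rules that apply to `Googlebot` (or `*`) keep mobile pages and their resources crawlable.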
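Before writing a crawler-specific group, it helps to know how crawlers pick one. Under RFC 9309, a crawler obeys the group whose `User-agent` line matches its product token (case-insensitively), merging groups that name the same agent, and falls back to the `*` group otherwise. The simplified Python sketch below uses hypothetical `parse_groups` and `rules_for` helpers; Google adds further fallbacks for some of its specialized crawlers, which this ignores. It shows that a crawler identifying as `Googlebot`, as Google's smartphone crawler does, never sees rules written for `Googlebot-Mobile`.

```python
def parse_groups(robots_txt: str) -> dict[str, list[tuple[str, str]]]:
    """Map each lower-cased user-agent token to its (directive, path) rules.

    Consecutive User-agent lines share one group; groups naming the same
    agent are merged. Rules before any User-agent line are ignored.
    """
    groups: dict[str, list[tuple[str, str]]] = {}
    agents: list[str] = []
    seen_rule = False
    for raw_line in robots_txt.splitlines():
        line = raw_line.split("#", 1)[0].strip()  # drop comments
        if ":" not in line:
            continue
        field, value = (part.strip() for part in line.split(":", 1))
        field = field.lower()
        if field == "user-agent":
            if seen_rule:  # a User-agent line after rules starts a new group
                agents, seen_rule = [], False
            agents.append(value.lower())
            groups.setdefault(value.lower(), [])
        elif field in ("allow", "disallow") and agents:
            seen_rule = True
            for agent in agents:
                groups[agent].append((field, value))
    return groups


def rules_for(groups, product_token: str):
    """Use the group naming this crawler, else fall back to the '*' group."""
    return groups.get(product_token.lower(), groups.get("*", []))


ROBOTS_TXT = """\
User-agent: Googlebot-Mobile
Disallow: /m/

User-agent: *
Disallow: /cgi-bin/
"""

groups = parse_groups(ROBOTS_TXT)
print(rules_for(groups, "Googlebot"))
```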

3. Optimizing Crawl Budget: Advanced Strategies

Search engines allocate a finite crawl budget. Use robots.txt to direct bots away from low-value pages and prioritize important content.

3.1 What Is Crawl Budget?

Crawl budget is the number of URLs a search engine will crawl on your site within a given period. High-authority sites tend to get more, but optimization matters for all.

3.2 Blocking Low-Value Pages

- Tag pages: `Disallow: /tag/`

- User dashboard: `Disallow: /my-account/`

- Outdated promos: `Disallow: /sale/old-offer/`

3.3 Handling Dynamic URLs

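- Problem: Session or tracking parameters create duplicate URLs for the same content.

- Solution: Block parameter URLs broadly, then allow the tracking patterns you still want crawled (e.g., `Disallow: /*?*` with `Allow: /*?utm_*`). The `Allow:` rule wins for URLs it matches because it is the longer, more specific rule.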
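When an `Allow` and a `Disallow` rule both match a URL, Google applies the rule with the longer path, and the less restrictive `Allow` on a tie; RFC 9309 describes the same precedence. A Python sketch of that resolution for the two rules above, with a simplified wildcard matcher (`is_allowed` and `_matches` are hypothetical helpers, not a library API):

```python
import re

# The two rules from the example above, as (directive, path pattern) pairs.
RULES = [
    ("disallow", "/*?*"),
    ("allow", "/*?utm_*"),
]


def _matches(pattern: str, path: str) -> bool:
    # '*' matches any characters; a trailing '$' anchors to the end of the URL.
    regex = re.escape(pattern).replace(r"\*", ".*")
    if regex.endswith(r"\$"):
        regex = regex[:-2] + "$"
    return re.match(regex, path) is not None


def is_allowed(path: str, rules=RULES) -> bool:
    """Longest matching pattern wins; on a tie, Allow beats Disallow."""
    best = None  # (pattern length, is_allow) of the strongest match so far
    for directive, pattern in rules:
        if _matches(pattern, path):
            candidate = (len(pattern), directive == "allow")
            if best is None or candidate > best:
                best = candidate
    return True if best is None else best[1]


for url in ("/shoes", "/shoes?sessionid=abc", "/shoes?utm_source=news",
            "/shoes?color=red&utm_source=news"):
    print(url, "->", "crawl" if is_allowed(url) else "skip")
```

The last URL is skipped because the `Allow` pattern requires `utm_` to come right after `?`; tracking parameters appended after other parameters stay blocked.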

3.4 Leveraging Sitemaps

Use the Sitemap: directive to highlight top pages. Combine it with Disallow: rules for precision crawling.

4. Tools and Manual Checks: Ensuring Your File Works

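4.1 Google Search Console's robots.txt Report

- Access: Navigate to Settings > Crawling > robots.txt.

- Features: Shows which robots.txt files Google found for your hosts, when each was last fetched, and any parsing errors or warnings. It replaced the older standalone robots.txt Tester.

- Tip: To check whether a specific URL is blocked, run it through the URL Inspection tool.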

4.2 Manual Verification

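- Accessibility: Visit `https://example.com/robots.txt` in a browser.

- Command line: Use `curl` with a crawler's user-agent to simulate a bot request (e.g., `curl -A "Googlebot" -i https://example.com/robots.txt` prints the status code and headers along with the file).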
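For a scripted version of these checks, here is a Python sketch using only the standard library. The `diagnose` and `check_robots` names are hypothetical. The status-code messages follow Google's documented handling (a 4xx other than 429 is treated as "no robots.txt", while 5xx and 429 make Google treat the site as fully disallowed for a while); the HTML check is a heuristic for servers that return an error page with status 200.

```python
from urllib.error import HTTPError
from urllib.request import Request, urlopen

# Googlebot's desktop user-agent string. Sending it only shows how your
# server responds to that header; the request still does not come from Google.
GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"


def diagnose(status: int, body: bytes) -> list[str]:
    """Return a list of problems found in a robots.txt HTTP response."""
    problems = []
    if status == 429 or status >= 500:
        problems.append(f"HTTP {status}: Google may treat the whole site as disallowed")
    elif 400 <= status < 500:
        problems.append(f"HTTP {status}: crawlers will assume there are no rules")
    elif status != 200:
        problems.append(f"HTTP {status}: expected 200")
    if status == 200:
        try:
            text = body.decode("utf-8")
        except UnicodeDecodeError:
            problems.append("file is not valid UTF-8")
        else:
            if text.lstrip().startswith("<"):
                problems.append("body looks like HTML, not plain-text rules")
    return problems


def check_robots(url: str) -> list[str]:
    """Fetch url with a Googlebot user-agent and diagnose the response."""
    request = Request(url, headers={"User-Agent": GOOGLEBOT_UA})
    try:
        with urlopen(request, timeout=10) as response:
            return diagnose(response.status, response.read())
    except HTTPError as error:
        return diagnose(error.code, error.read())
```

Calling `check_robots("https://example.com/robots.txt")` returns an empty list when none of these checks fire.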

4.3 Common Errors to Inspect

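- File not in root: Requesting `/robots.txt` returns a 404.

- Case sensitivity: The filename must be all lowercase (`robots.txt`), and the paths in your rules are case-sensitive too.

- Encoding: The file must be UTF-8 encoded plain text.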

5. Emergency Fixes: Recovering from a Misconfiguration

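- Remove faulty rules: Delete incorrect `Disallow:` lines.

- Resubmit your sitemap: Submit it again in Google Search Console.

- Check indexing reports: In Search Console's Page indexing report (formerly Coverage), unblock critical pages listed under "Blocked by robots.txt."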

6. Special Cases: Multilingual and Dynamic Sites

6.1 Multilingual Subdomains

Use a separate robots.txt per subdomain (e.g., example.com and zh.example.com).

6.2 Dynamic E-Commerce

Block filter/session parameters while allowing UTM tracking (e.g., `Disallow: /*?order=`, `Allow: /*?utm_*`).

7. Robots.txt and Sitemap: A Collaborative Duo

- robots.txt: Tells bots where not to go.

- Sitemap: Guides bots to priority pages.
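Because the two files work together, it is worth checking that they don't contradict each other: a URL listed in the sitemap but disallowed in robots.txt sends crawlers mixed signals. A minimal Python sketch with made-up sample files and a hypothetical `blocked_sitemap_urls` helper. Note that `urllib.robotparser` only does plain prefix matching (no `*` or `$`), so this check is reliable only for prefix rules like these.

```python
import xml.etree.ElementTree as ET
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /search/
Sitemap: https://example.com/sitemap.xml
"""

SITEMAP_XML = """\
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/</loc></url>
  <url><loc>https://example.com/blog/robots-guide</loc></url>
  <url><loc>https://example.com/search/?q=shoes</loc></url>
</urlset>
"""

NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def blocked_sitemap_urls(robots_txt: str, sitemap_xml: str,
                         user_agent: str = "Googlebot") -> list[str]:
    """Return sitemap URLs that the robots.txt rules would stop user_agent from crawling."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    root = ET.fromstring(sitemap_xml)
    urls = [loc.text.strip() for loc in root.findall("sm:url/sm:loc", NS)]
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


print(blocked_sitemap_urls(ROBOTS_TXT, SITEMAP_XML))
```

Any URL it reports should either come out of the sitemap or be unblocked in robots.txt.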

8. Differences Between Google and Baidu Robots.txt Rules

Each search engine handles directives slightly differently (e.g., Allow: is not always respected by Baiduspider).

9. Preparing for the Future: Maintenance and Best Practices

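- Use noindex for pages that must stay out of search results: Add a `noindex` robots meta tag or `X-Robots-Tag` HTTP header, and leave those pages crawlable, since bots must fetch a page to see the tag. Google does not support `noindex:` lines inside robots.txt, and genuinely private content should sit behind a login.

- Audit quarterly for static sites.

- Audit monthly for dynamic or e-commerce platforms.

- Block precisely: Avoid broad disallows that hide key functionality.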

10. Three-Step Action Plan for Robots.txt Newcomers

- Initial Setup:

  - Create `robots.txt` in your site's root.

  - Add:

        User-agent: *
        Disallow: /admin/
        Disallow: /search/
        Sitemap: https://example.com/sitemap.xml

  - Verify it's publicly accessible.

- First-Week Optimization:

  - Block dynamic URL patterns and allow key ones.

  - Add a separate robots.txt for each multilingual subdomain.

  - Test in Google Search Console.

- Ongoing Maintenance:

  - Review Search Console's indexing reports.

  - Remove outdated directives.

  - Update sitemaps regularly.

Conclusion

The robots.txt file is your SEO gatekeeper. Master it, avoid errors, and pair it with smart content and sitemap strategy for long-term success.

FoxDoo Technology
