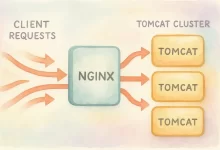

Let’s get real: downtime kills momentum. Nginx Tomcat Load Balancing is my not-so-secret sauce for keeping Java web apps humming when traffic spikes, bosses hover, and users click with reckless abandon. In this mega-guide I’ll walk you from a single lonely servlet container to a full-on cluster shielded by Nginx, complete with health checks, sticky sessions, SSL offload, and the performance tweaks that separate “it works” from “bring it on”.

Why Bother? The Business Case for Nginx Tomcat Load Balancing

Ten years ago I crashed a client demo because one Tomcat ran out of threads during a surprise customer click-fest. Ever since, I’ve sworn by Nginx Tomcat Load Balancing. It distributes load, adds failover, centralizes SSL, and lets you roll...
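To make the pieces named above concrete, here is a minimal sketch of what such a setup might look like, not the guide's actual configuration. The upstream name `tomcat_cluster`, the backend addresses, the hostname, and the certificate paths are all placeholders I made up for illustration. It assumes open-source Nginx, where failure detection is passive (`max_fails` / `fail_timeout`) and `ip_hash` gives a simple form of sticky sessions keyed on the client IP.

```nginx
# Goes inside the http { } context of nginx.conf (or a file included from it).

# Hypothetical pool of two Tomcat instances; addresses are placeholders.
upstream tomcat_cluster {
    ip_hash;  # same client IP goes to the same backend (basic stickiness)

    # Passive health check: after 3 failed attempts within 30s,
    # the server is skipped for the next 30s.
    server 10.0.0.11:8080 max_fails=3 fail_timeout=30s;
    server 10.0.0.12:8080 max_fails=3 fail_timeout=30s;
}

server {
    listen 443 ssl;
    server_name app.example.com;  # placeholder hostname

    # SSL is terminated here; Tomcat only sees plain HTTP.
    ssl_certificate     /etc/nginx/ssl/app.example.com.crt;  # placeholder path
    ssl_certificate_key /etc/nginx/ssl/app.example.com.key;  # placeholder path

    location / {
        proxy_pass http://tomcat_cluster;

        # Pass the original request details through to Tomcat.
        proxy_set_header Host              $host;
        proxy_set_header X-Real-IP         $remote_addr;
        proxy_set_header X-Forwarded-For   $proxy_add_x_forwarded_for;
        proxy_set_header X-Forwarded-Proto $scheme;

        # Failover: retry on the next backend for these failure types.
        proxy_next_upstream error timeout http_502 http_503;
    }
}
```

Note that `ip_hash` is only one way to get stickiness, and it can distribute unevenly when many users share one IP. Running `nginx -t` before reloading checks that the file parses.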

As the person responsible for our company’s IT infrastructure, one of my essential tasks was optimizing our web servers. After much research, testing, and consideration, Nginx emerged as the optimal solution due to its high performance, reliability, and flexibility.

Understanding Nginx Architecture and Working Model

One aspect that immediately attracted me to Nginx was its highly efficient architecture. Unlike Apache’s traditional process- or thread-per-connection approach, Nginx uses a Master-Worker architecture.

Master and Worker Processes

The Master process manages global operations: reading configurations, launching worker processes, and overseeing their health. Each Worker process, operating independently, handles client requests using an event-driven model, efficiently managing multiple connections with minimal resource usage.

Event-Driven Asynchronous Model

Worker processes employ a non-blocking, event-driven model, ensuring quick request handling...
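As a rough illustration of how the master-worker model described above surfaces in configuration, the fragment below shows the two directives that most directly control it. The values are illustrative assumptions, not recommendations from the article.

```nginx
# Main (top-level) context of nginx.conf.

# The master process starts this many workers; "auto" asks Nginx to
# match the number of available CPU cores.
worker_processes auto;

events {
    # Upper bound on simultaneous connections each worker handles in
    # its event loop (this count includes connections to backends).
    worker_connections 1024;  # illustrative value
}
```

Under these settings the theoretical ceiling on concurrent connections is roughly `worker_processes × worker_connections`, which is why a handful of workers can serve thousands of clients without a thread per connection.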

FoxDoo Technology
