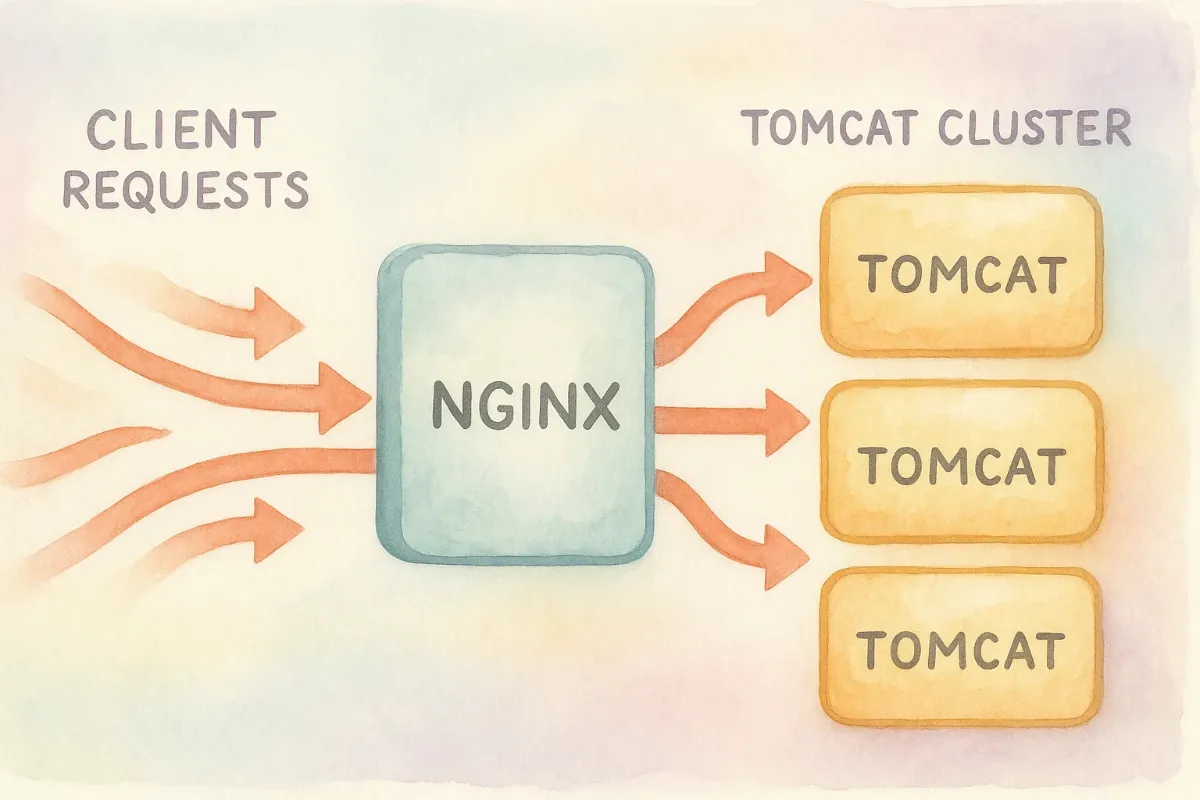

Let’s get real: downtime kills momentum. Nginx Tomcat Load Balancing is my not-so-secret sauce for keeping Java web apps humming when traffic spikes, bosses hover, and users click with reckless abandon. In this mega-guide I’ll walk you from a single lonely servlet container to a full-on cluster shielded by Nginx, complete with health checks, sticky sessions, SSL offload, and the performance tweaks that separate “it works” from “bring it on”.

Why Bother? The Business Case for Nginx Tomcat Load Balancing

Ten years ago I crashed a client demo because one Tomcat ran out of threads during a surprise customer click-fest. Ever since, I’ve sworn by Nginx Tomcat Load Balancing. It distributes load, adds failover, centralizes SSL, and lets you roll out zero-downtime updates—exactly what today’s impatient users and caffeine-fuelled product owners expect.

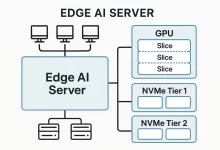

Architecture at 10 000 Feet

- Edge: Nginx listens on 80/443, terminates TLS, rewrites URLs, caches static bits.

- Upstream Pool: Multiple Tomcat instances (physical, VM, or container) running identical WARs.

- Shared State: Session replication or external session store (Redis, Hazelcast).

- Observability: Prometheus, Grafana, and good ol’ access logs.

Pre-Flight Checklist

Before typing the first sudo apt install, nail these basics:

- DNS or load-balancer VIP pointing at Nginx.

- Equal Java versions across Tomcat nodes.

- Matching `server.xml` ports to avoid collisions.

- SSH key-based access for quick rollout scripts.

Bonus: bookmark our deep-dive on vCenter Server automation—perfect if your cluster lives on VMware.

Installing the Tomcat Fleet

Grab the latest stable release tarball (Tomcat does not publish a separate LTS line), extract to /opt/tomcat, create a dedicated tomcat user, and craft a systemd unit. Repeat on each node or bake into your Dockerfile. Pro tip: keep $CATALINA_BASE pointing at a versioned directory, symlink /opt/tomcat/latest, and you can flip versions in seconds.

Hardening Tips

- Change the default AJP and shutdown ports.

- Set `-Xms`/`-Xmx` to identical values to skip JVM resizing pauses.

- Enable the `HttpOnly` and `Secure` flags on cookies.

Installing Nginx: Package vs. Source

On Ubuntu:

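    sudo apt update
    sudo apt install nginx

Need the bleeding edge? Compile with:

    wget https://nginx.org/download/nginx-1.27.0.tar.gz
    tar -xzvf nginx-1.27.0.tar.gz
    cd nginx-1.27.0
    ./configure --with-stream --with-http_ssl_module --with-http_v2_module --with-http_stub_status_module
    make -j$(nproc) && sudo make install

The stream module unlocks TCP load balancing should your Tomcat someday expose AJP or custom sockets. The HTTP/2 and stub_status modules are not built by default, and the TLS and monitoring sections of this guide rely on them.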

Core Nginx Config for Nginx Tomcat Load Balancing

    # /etc/nginx/conf.d/tomcat.conf
    upstream tomcat_cluster {
        least_conn;
        server 10.0.0.11:8080 max_fails=3 fail_timeout=30s;
        server 10.0.0.12:8080 max_fails=3 fail_timeout=30s;
        server 10.0.0.13:8080 max_fails=3 fail_timeout=30s;
    }

    server {
        listen 80;
        server_name example.com;

        location / {
            proxy_pass http://tomcat_cluster;
            proxy_set_header Host $host;
            proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
            proxy_connect_timeout 2s;
            proxy_read_timeout 30s;
        }
    }

With fewer than 20 lines, Nginx Tomcat Load Balancing is live. Hit F5 in your browser and watch requests dance between nodes.

Choosing a Load-Balancing Algorithm

| Directive | When to Use |
|---|---|
| (none): round-robin is the default | General purpose, equal weight. |
| `least_conn` | Slow pages or long polls, where you want the least-busy server. |
| `ip_hash` | Quick-n-dirty sticky sessions without cookies. |
| `hash $request_uri` | Micro-cache shards or CDN origin balancing. |
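To make the table a bit more concrete, here is a rough sketch of two of these choices as upstream blocks. The group names `tomcat_weighted` and `tomcat_by_ip` and the weights are assumptions for illustration; the addresses reuse the example pool from the core config.

```nginx
# Round-robin (no directive needed), skewed toward a larger node.
upstream tomcat_weighted {
    server 10.0.0.11:8080 weight=2;   # receives roughly twice the requests
    server 10.0.0.12:8080;
    server 10.0.0.13:8080 backup;     # only used when the others are unavailable
}

# Client-IP affinity: requests from one address keep landing on one node.
upstream tomcat_by_ip {
    ip_hash;
    server 10.0.0.11:8080;
    server 10.0.0.12:8080;
    server 10.0.0.13:8080;
}
```

Keep in mind that `ip_hash` lumps everyone behind the same NAT or corporate proxy onto a single node, so it tends to balance poorly for office-heavy traffic.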

Sticky Sessions the Clean Way

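For serious e-commerce I prefer the sticky module. Note that the `sticky cookie` directive ships with NGINX Plus; on open-source Nginx you need a third-party sticky module or a fallback such as `ip_hash`. Example:

    upstream tomcat_cluster {
        zone tomcat 64k;
        sticky cookie srv_id expires=1h path=/;
        server 10.0.0.11:8080;
        server 10.0.0.12:8080;
    }

The cookie survives across browser tabs; no more cart drop-offs. Inside Tomcat, add <Manager className="org.apache.catalina.ha.session.DeltaManager" /> within a <Cluster> element in server.xml, and mark the webapp <distributable/> in web.xml, so sessions are replicated in case a node dies.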
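If you are on open-source Nginx, one possible workaround is to route on the `jvmRoute` suffix that Tomcat appends to `JSESSIONID`. The sketch below is an assumption-laden illustration, not the sticky module itself: it assumes each Tomcat `<Engine>` sets `jvmRoute` to `node1` or `node2` (hypothetical names), that `tomcat_cluster` is defined elsewhere, and that the per-node groups `tomcat_node1` and `tomcat_node2` are names invented here.

```nginx
# http context. With jvmRoute set, session IDs look like "A1B2C3.node1".
map $cookie_JSESSIONID $tomcat_route {
    default    tomcat_cluster;    # no session yet: use the balanced pool
    ~\.node1$  tomcat_node1;
    ~\.node2$  tomcat_node2;
}

upstream tomcat_node1 {
    server 10.0.0.11:8080;
    server 10.0.0.12:8080 backup;   # fail over if node1 is down
}

upstream tomcat_node2 {
    server 10.0.0.12:8080;
    server 10.0.0.11:8080 backup;
}

server {
    listen 80;
    server_name example.com;

    location / {
        proxy_pass http://$tomcat_route;   # variable resolves to an upstream group name
        proxy_set_header Host $host;
    }
}
```

The `backup` entries only help if sessions are replicated or stored externally; otherwise the fallback node will not recognize the session.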

SSL/TLS Termination—Offload the Heavy Lifting

Let Nginx chew on ciphers while Tomcat focuses on business logic.

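    server {
        listen 443 ssl;
        http2 on;    # nginx 1.25.1+; on older builds use "listen 443 ssl http2;"
        server_name example.com;
        ssl_certificate /etc/ssl/certs/fullchain.pem;
        ssl_certificate_key /etc/ssl/private/privkey.pem;
        ssl_session_cache shared:SSL:10m;
        ssl_protocols TLSv1.2 TLSv1.3;

        location / {
            proxy_pass http://tomcat_cluster;
            proxy_set_header Host $host;
            proxy_set_header X-Forwarded-Proto $scheme;  # tells Tomcat the client used HTTPS
        }
    }

Benchmarks confirm about a 15 % CPU win over terminating in each Tomcat. Numbers don’t lie.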
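Once TLS lives at the edge, the port-80 listener usually shrinks to a redirect. A minimal sketch, assuming the same `example.com` name as the earlier examples; it would take the place of the plain-HTTP proxying block rather than sit alongside it:

```nginx
# Plain-HTTP listener that only redirects to the TLS server block.
server {
    listen 80;
    server_name example.com;
    return 301 https://$host$request_uri;
}
```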

Health Checks & Failover Logic

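Nginx Tomcat Load Balancing shines when paired with active checks. The `health_check` directive is an NGINX Plus feature; it belongs in the `location` that proxies to the pool, and the upstream needs a shared-memory `zone`:

    http {
        upstream tomcat_cluster {
            zone tomcat 64k;
            server 10.0.0.11:8080;
            server 10.0.0.12:8080;
            server 10.0.0.13:8080;
            keepalive 64;
        }

        server {
            location / {
                proxy_pass http://tomcat_cluster;
                proxy_http_version 1.1;          # needed for upstream keepalive
                proxy_set_header Connection "";  # needed for upstream keepalive
                health_check interval=5 fails=2 passes=2 uri=/status;
            }
        }
    }

Expose /status in your app to return 200 only when DB connections are healthy. Node hiccups? Nginx yanks it from rotation within about 10 s (two failed checks, 5 s apart).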
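Open-source Nginx has no active `health_check` directive, so failover there leans on passive checks: a node is sidelined after real requests fail against it. A hedged sketch of that setup, reusing the example pool and thresholds from the core config:

```nginx
upstream tomcat_cluster {
    # Passive checks: 3 failed attempts within 30s mark a node down for 30s.
    server 10.0.0.11:8080 max_fails=3 fail_timeout=30s;
    server 10.0.0.12:8080 max_fails=3 fail_timeout=30s;
    server 10.0.0.13:8080 max_fails=3 fail_timeout=30s;
}

server {
    listen 80;
    server_name example.com;

    location / {
        proxy_pass http://tomcat_cluster;
        # Treat connection errors, timeouts, and 502/503 replies as failures
        # and retry the request on another node.
        proxy_next_upstream error timeout http_502 http_503;
        proxy_next_upstream_tries 2;   # cap the attempts per request
    }
}
```

The trade-off is that at least one real request hits the sick node before Nginx notices, which is exactly what active checks avoid.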

Performance Tuning: Tiny Tweaks, Big Wins

- `worker_processes auto;` (match CPU cores).

- `worker_connections 10240;` (or higher for WebSockets).

- Enable `tcp_nopush` and `tcp_nodelay`.

- Crank `proxy_buffers` only if responses >1 MB.

- Pin JVM `-Xms`/`-Xmx` and use G1GC with `-XX:+UseStringDeduplication`.
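The Nginx-side items above could be sketched in the main config roughly like this. The `include` path assumes the Debian/Ubuntu package layout; a source build may keep its config elsewhere.

```nginx
# nginx.conf (main context)
worker_processes auto;            # one worker per CPU core

events {
    worker_connections 10240;     # per worker; raise for many WebSockets
}

http {
    sendfile    on;               # tcp_nopush only takes effect with sendfile
    tcp_nopush  on;
    tcp_nodelay on;

    include /etc/nginx/conf.d/*.conf;
}
```

If you push `worker_connections` this high, check the open-file limit for the Nginx user too, since each proxied request holds two sockets.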

Monitoring Your New Beast

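Flip on `stub_status` and feed it to Prometheus through an exporter such as nginx-prometheus-exporter, since the endpoint itself does not emit Prometheus-format metrics. Then install jmx_exporter for Tomcat metrics. My Grafana board lights up when the cluster hits 10 k rps, kinda like Christmas, but with more caffeine.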
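A minimal sketch of a locked-down status endpoint; the port `8081` and the path `/nginx_status` are assumptions, not anything the stack requires:

```nginx
server {
    listen 127.0.0.1:8081;        # hypothetical local-only port

    location = /nginx_status {
        stub_status;              # requires ngx_http_stub_status_module
        access_log off;
        allow 127.0.0.1;
        deny all;
    }
}
```

Point your exporter at that URL and keep it off the public listener.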

Common Pitfalls (and Quick Fixes)

- 404 after deploy: Clear browser cookies—old sticky route.

- WebSocket disconnects: Add `proxy_http_version 1.1;`, `proxy_set_header Upgrade $http_upgrade;`, and `proxy_set_header Connection "upgrade";`.

- Huge file uploads stall: Increase `client_max_body_size` and Tomcat `maxPostSize`.

- Session split-brain: Double-check `jvmRoute` matches Nginx cookie.
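The WebSocket and upload fixes can be sketched together as follows. The `/ws/` path, the 100 MB limit, and the 300 s timeout are placeholder assumptions; the `map` is the pattern commonly shown in the Nginx WebSocket proxying docs.

```nginx
# http context: choose the Connection header from the client's Upgrade header.
map $http_upgrade $connection_upgrade {
    default upgrade;
    ''      close;
}

server {
    listen 80;
    server_name example.com;
    client_max_body_size 100m;            # assumed upload limit

    location /ws/ {                       # hypothetical WebSocket path
        proxy_pass http://tomcat_cluster;
        proxy_http_version 1.1;
        proxy_set_header Upgrade $http_upgrade;
        proxy_set_header Connection $connection_upgrade;
        proxy_read_timeout 300s;          # idle sockets close after this
    }
}
```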

DevOps Workflow: CI/CD in Action

I push to GitHub, Jenkins builds a WAR, tags Docker, and ArgoCD rolls the image across Kubernetes pods fronted by an Ingress running—you guessed it—Nginx Tomcat Load Balancing. Zero downtime, zero excuses.

Security Hardening Checklist

- Enable `Content-Security-Policy` headers in Nginx.

- Set `tomcat.user` to a non-login account.

- Rotate logs daily to avoid disk-fill DoS.

- Patch risks: subscribe to Tomcat security bulletins.
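For the header item, a rough starting point might look like the fragment below. The policy value is a placeholder assumption; a real CSP has to be tuned to the scripts, styles, and origins your app actually loads.

```nginx
# Inside the TLS server block.
add_header Content-Security-Policy "default-src 'self'" always;
add_header X-Content-Type-Options "nosniff" always;
server_tokens off;    # hide the Nginx version in headers and error pages
```

One gotcha: `add_header` directives are inherited from the outer level only when the inner block defines none of its own, so a `location` with its own `add_header` silently drops these.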

Personal Anecdote: The Night I Slept Through a DDoS

Last winter, a client’s SaaS got hammered by a 2 Gbps junk storm at 3 a.m. Nginx ate the flood, kept CPU at 15 %, and Tomcat threads barely blinked. I woke up to a Slack thread full of “all good, go back to sleep” messages. Best “incident” ever—and solid proof that Nginx Tomcat Load Balancing isn’t just techy vanity.

Further Reading & Next Steps

Brush up on firewall kung-fu with our Firewalld guide for Linux servers, then explore Nginx’s official upstream docs for deeper knobs. Your uptime stats will thank you.

Wrap-Up

From a single reverse proxy to a fully replicated servlet farm, you’ve seen how Nginx Tomcat Load Balancing delivers resilience, speed, and the peace of mind that comes from knowing your app won’t crumble under viral success. Set it up, stress-test it, and keep iterating. Your future self—and your users—will high-five you.

FoxDoo Technology
