Three winters ago I got paged at 2:17 a.m. A demo cluster for an investor run-through was dropping frames. The culprit? A “temporary” test rig doing double duty as an AI server for video captioning and a grab bag of side projects. My eyes were sand; the wattmeter was screaming. The fix wasn’t a tweet, it was a rebuild—honest power math, sane storage, real cooling, and a scheduler that didn’t panic when a job went sideways. This guide is everything I’ve learned since: a no‑hype, hands‑dirty map to spec, wire, and run an AI server that stays fast after midnight.

Why “AI Server” Is Its Own Species

Call it what it is: a race car with a mortgage. A real AI server pulls more power, moves more data, and hates guessing. Classic web stacks tolerate jitter; AI pipelines don’t. You need predictable token throughput and stable thermals, not wishful thinking. That means planning around four constraints—power, cooling, data path, and scheduling—and then shaping everything else to serve those four.

- Power: GPUs spike. Your branch circuit doesn’t care about your deadline. Budget for peaks, not averages.

- Cooling: Air can do a lot—until it can’t. When fans sound like a leaf blower, you’re losing.

- Data path: Tokens and tensors are hungry. NVMe placement matters more than pretty dashboards.

- Scheduling: Jobs collide. Respect the queue, reserve the right resources, and preemption stops being scary.

Spec the Box: CPU, GPU, Memory, and the Boring Stuff That Saves You

Every component is a trade. Don’t buy parts—buy balance. An AI server rarely chokes because one part is slow; it chokes because the whole pipeline is mismatched.

CPU & Sockets

You need lanes and memory channels, not just cores. Pick a platform that gives your GPUs the PCIe bandwidth they deserve. If your inference stack spends time tokenizing, do yourself a favor and enable CPU AVX/AMX paths to keep GPUs focused on the heavy mats.

GPU Layout & Topology

Train? Chase high‑bandwidth interconnects and uniform links. Inference? Favor more smaller cards if your model shards neatly. Either way, map the topology. Jobs that cross sockets and bridges without reason are how you lose 15% and blame the compiler.

Memory (DDR & Beyond)

For the host, capacity covers the worst day; bandwidth covers every day. If you’re testing pooled or extended memory tech, be honest about latency. It helps when the working set of your pre/post‑processing fits in local RAM; the AI server shouldn’t spend the afternoon paging.

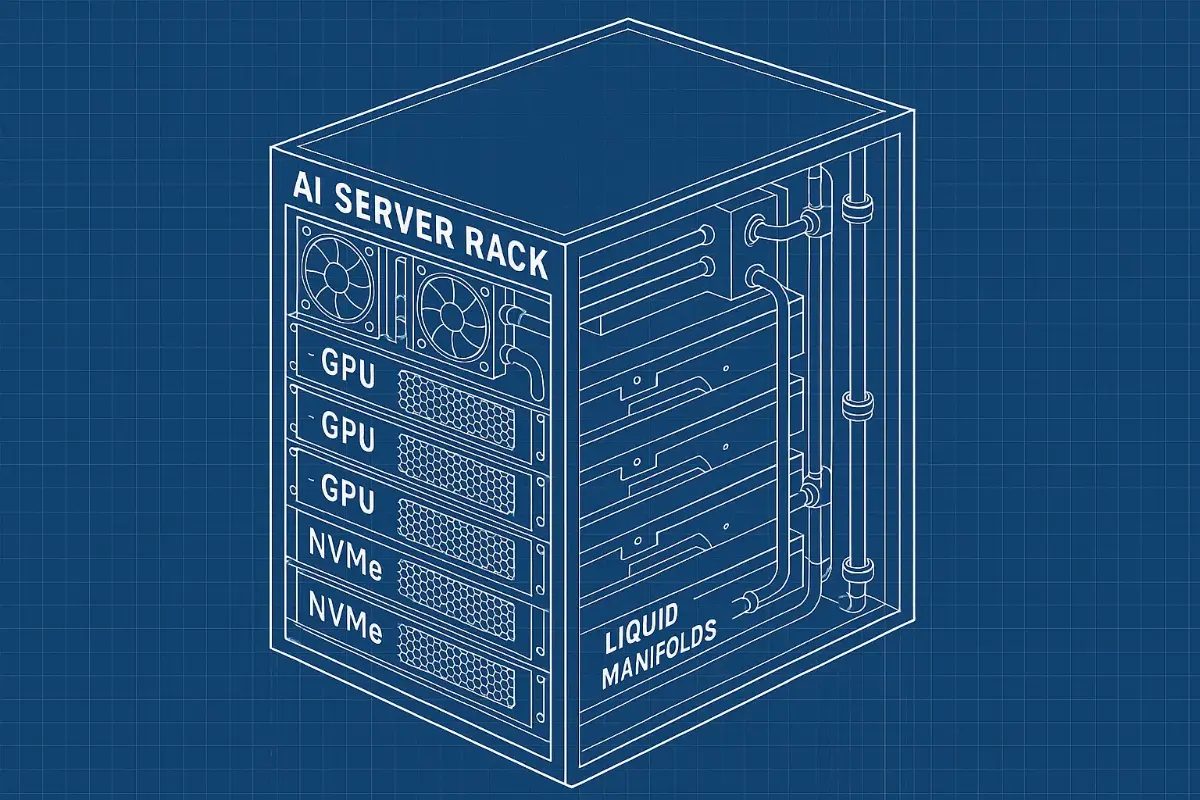

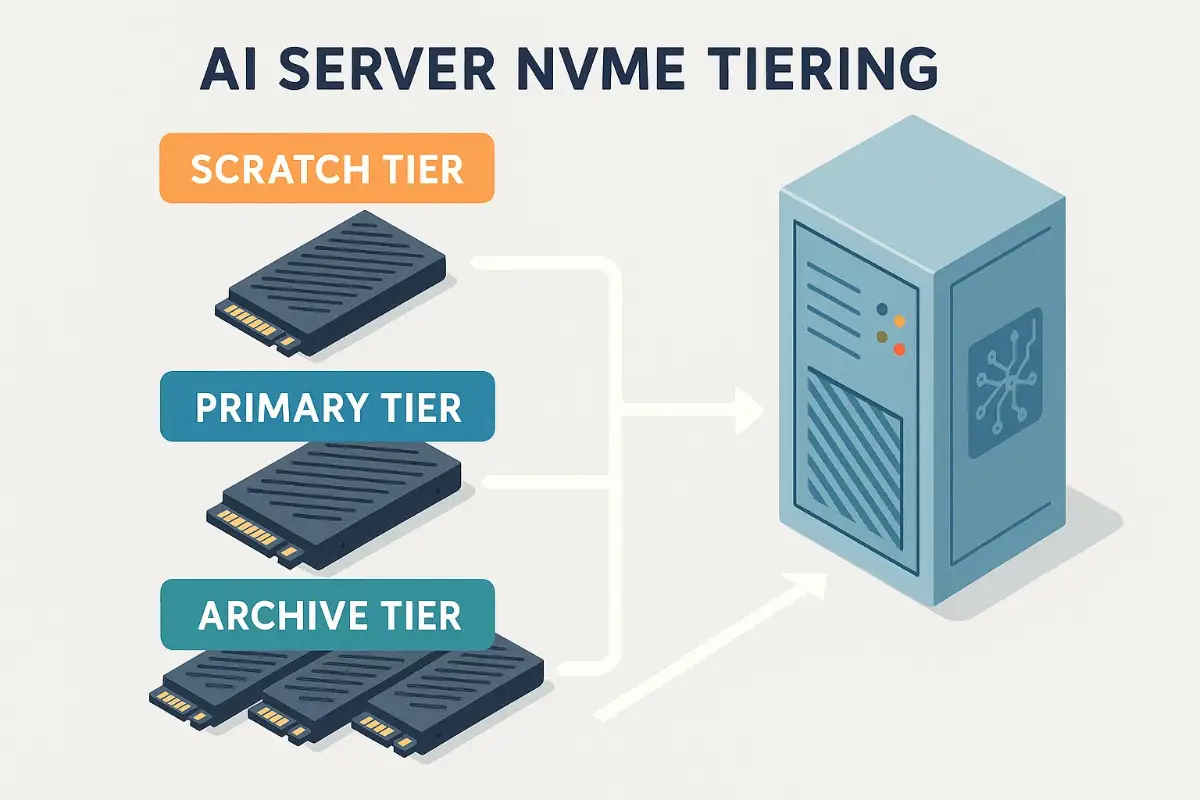

Storage (Tiered on Purpose)

- Tier 0 – NVMe scratch: fast, short‑lived, sized for the biggest batch of the day.

- Tier 1 – Primary NVMe: datasets, model weights, embeddings.

- Tier 2 – Bulk: big, cheap, slow—good for archives and checkpoints.

If you’re new to redundancy, read a friendly primer first and bookmark it. I like this clear, practical explainer on levels and trade‑offs: RAID Levels Explained: 12 Game‑Changing Truths for Rock‑Solid Storage. When you’re done, set up backups like you mean it. This step‑by‑step guide is a lifesaver for small teams: Automated MySQL Backup – 11 Awesome Power Hacks.

Power Budgeting: No More Tripping Breakers

Here’s the quick ritual that tamed my chaos.

- Measure idle and sustained draw with a clamp meter or smart PDU.

- Load GPUs to expected duty cycle (training vs. bursty inference are different creatures).

- Record p95 and p99 watts over a full job. Size to p99. Spikes are real.

- Leave headroom for firmware updates and fan curves. They drift.

| Component | Typical (W) | Peak (W) | Notes |

|---|---|---|---|

| CPU (1 socket) | 140 | 280 | Turbo adds up |

| GPU (high‑end) | 400–600 | 600–700 | Per card—multiply honestly |

| NVMe (per drive) | 5–12 | 15 | Heavy writes spike |

| Fans & pumps | 30–80 | 100+ | Scales with heat |

Pro tip: stagger boot and warm‑up. I once watched a brand‑new AI server brown out because every card ramped simultaneously while the OS rebuilt caches. It’s a gut‑punch you only need once.

Cooling: Air, Liquid, and Don’t‑Fry Fridays

Air works until delta‑T laughs at you. If you’re staying on air, keep filters clean, use blanking panels, and don’t cram cables into the front intake path. When leaf blowers become the office soundtrack, start testing liquid. Quick‑disconnect manifolds used to scare me; now they’re how I keep the room below sauna temps. Either way, instrument everything—intake, exhaust, liquid in/out, hot spots near the NICs and NVMe.

Good news: a well‑cooled AI server isn’t just quieter; it’s faster. GPUs throttle gently at first. You’ll chalk it up to “bad luck” until you log thermal ceilings and see the pattern.

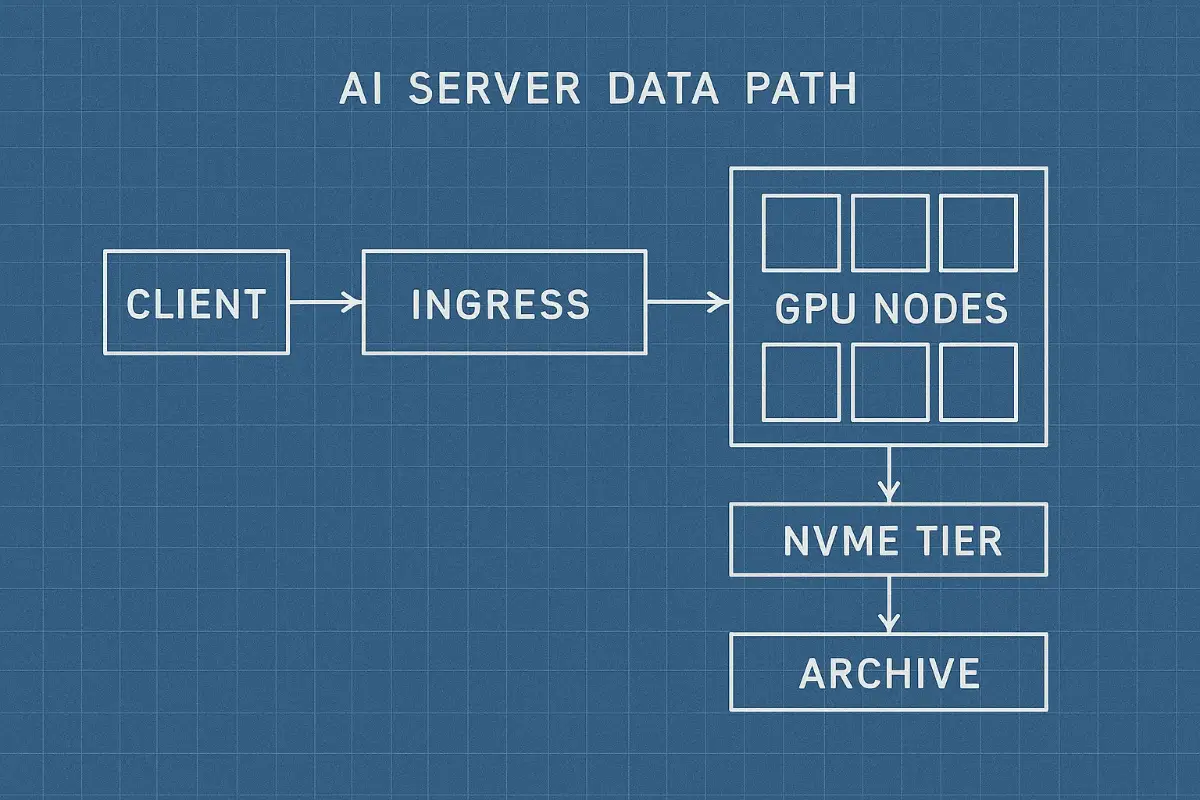

Data Path: Where Your Tokens Actually Go

Most lag isn’t math; it’s waiting. Your pipeline probably looks like this:

Ingress → Preprocess → Tokenize → Weights fetch → Inference → Postprocess → PersistMap the real bytes. That’s when you notice the tokenizer hitting a cold path on the CPU, or a model shard living three milliseconds farther across the fabric than it should. Upgrade what moves data, not just what does math. Inference workloads hate surprise hops.

Operating System & Filesystems: Keep It Boring, Keep It Fast

Pick a server‑grade distro you actually know—Ubuntu Server LTS or Rocky/Alma are fine. Keep kernel and drivers pinned to a known‑good set. For filesystems, ZFS brings snapshots and checksums; XFS is wonderfully predictable; ext4 is the Toyota Corolla of filesystems—unflashy, but it will get you home. If you go ZFS for datasets and checkpoints, give it RAM and a mirrored SLOG if you care about sync writes.

Networking: Packets with a Purpose

A hungry AI server makes a network honest. Your design falls apart when you treat the NIC like an afterthought. For east‑west traffic, favor stable, well‑documented drivers, and monitor buffer health during heavy inference windows. If you’re evaluating SmartNICs or DPUs to offload TLS, storage, or overlay headaches, test them with your ugliest traffic first. The best demo is the one that doesn’t crash during peak.

Storage Recipes That Don’t Bite Back

Local NVMe, Cleanly Laid Out

# Example: create three NVMe namespaces for clean separation nvme create-ns /dev/nvme0 --nsze=488378646 --ncap=488378646 --flbas=0 nvme attach-ns /dev/nvme0 --namespace-id=1 --controller-id=0x1 mkfs.xfs /dev/nvme0n1 mount -o noatime,discard /dev/nvme0n1 /data/modelsZFS for Snapshots & Checks

zpool create -o ashift=12 mlpool mirror /dev/nvme0n1 /dev/nvme1n1 zfs set atime=off compression=zstd mlpool zfs create mlpool/weights zfs create mlpool/checkpoints zfs snapshot -r mlpool@nightlySecurity: Reduce Blast Radius by Default

- Run jobs as non‑root. Always.

- Lock model artifacts behind read‑only mounts in prod.

- Rotate credentials and machine tokens on a schedule, not vibes.

- Segment admin interfaces and BMC/IPMI onto a management VLAN. No exceptions.

People are creative; a bored intern is basically a penetration test. Your AI server deserves gates and guardrails like anything else holding customer data.

Schedulers & Runtimes: Make the Queue Your Friend

You don’t need a perfect cluster to benefit from a scheduler. Even one AI server with two GPUs can use a queue.

Kubernetes GPU Request (Basic)

apiVersion: v1 kind: Pod metadata: name: summarizer spec: restartPolicy: OnFailure containers: - name: app image: registry.local/summarizer:stable resources: limits: nvidia.com/gpu: 1 volumeMounts: - name: weights mountPath: /models volumes: - name: weights persistentVolumeClaim: claimName: weights-pvcSlurm Job (Simple)

#SBATCH -J chunk-translate #SBATCH -N 1 #SBATCH --gpus=2 #SBATCH --time=02:00:00 #SBATCH --mem=128G srun python translate.py --in data/batch.parquet --out out/Observability: Evidence or It Didn’t Happen

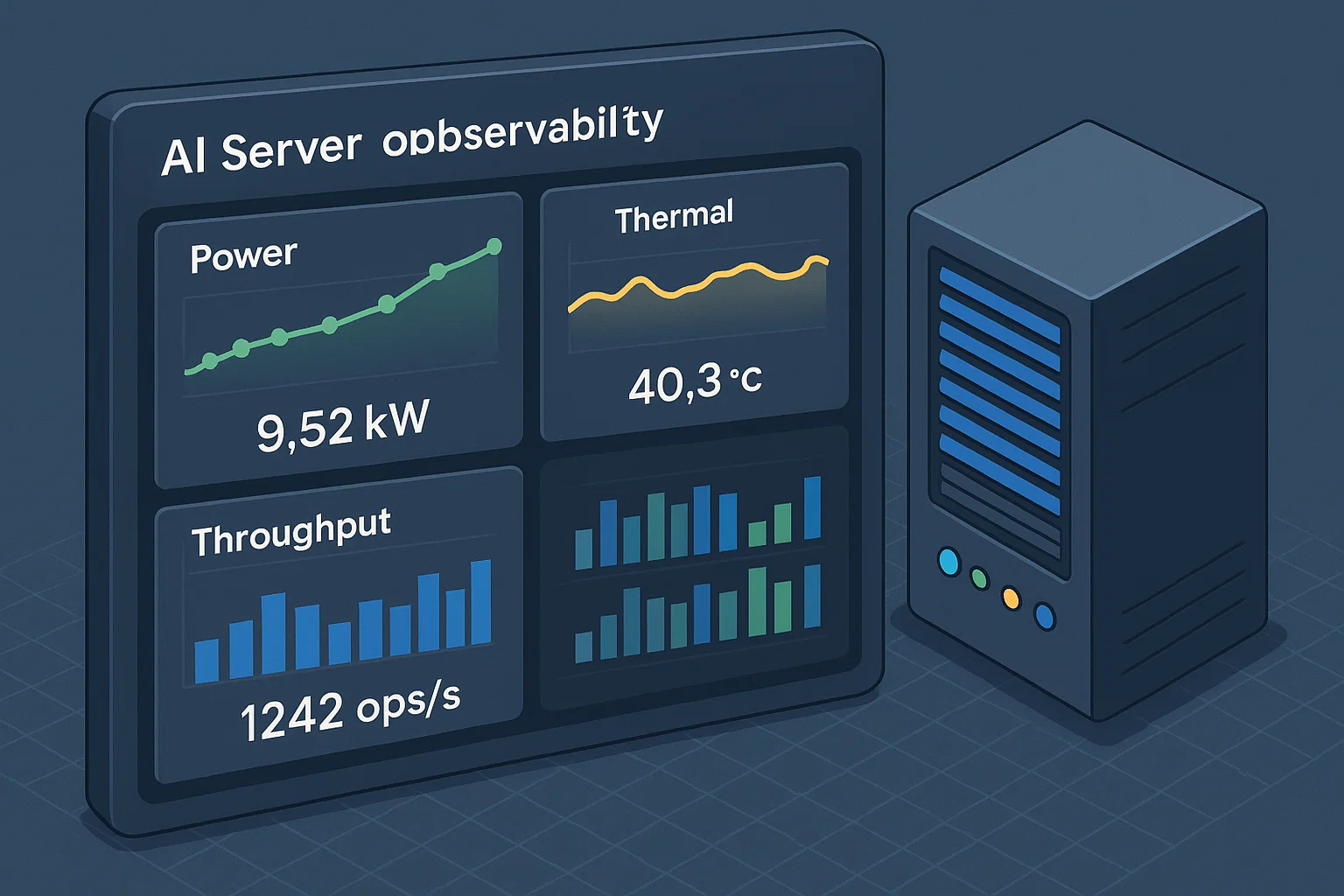

Keep three dashboards: throughput, latency, and “is the box on fire?” Add alerts for GPU throttle, PDU near‑trip, and NVMe queue depth. I like one page that tells me, at a glance, whether the AI server is compute‑bound, IO‑bound, or heat‑bound. That alone kills most wild goose chases.

# quick-and-dirty sanity loop watch -n 1 "\ nvidia-smi --query-gpu=utilization.gpu,temperature.gpu,power.draw --format=csv,noheader; iostat -x 1 2 | tail -n +7 | head -n 5; ipmitool sdr | egrep 'Inlet|Exhaust|CPU|Fan' "Deployment Pipelines: From Laptop to Rack Without Tears

Containerize where it helps, not because a blog said so. Pin exact CUDA/cuDNN versions in images and build them from a Dockerfile you own.

FROM nvidia/cuda:12.4.1-cudnn-runtime-ubuntu22.04 RUN apt-get update && apt-get install -y python3-pip libssl-dev COPY requirements.txt . RUN pip3 install -r requirements.txt COPY . /app ENV TORCH_CUDA_ARCH_LIST="8.0;8.6;9.0" CMD ["python3","/app/server.py"]Cost Truths: The Bill Comes From Four Places

- Capex: chassis, GPUs, CPU, NVMe, RAM, NICs, PDUs.

- Power: keep a running kWh tally.

- Cooling: fans, pumps, and HVAC hours matter.

- People: every 3 a.m. fix is money. Fewer surprises = fewer weekends lost.

A quiet AI server is cheaper than a “fast” one that spends hours throttled and rebooting. You pay either way. Pay once.

Migration Playbook: What to Move First

Don’t forklift the whole shop. Move one pipeline: preprocess tokens, run a mid‑sized model, write results, measure. Bring bigger models next. Your AI server learns your traffic pattern as you learn its quirks.

Edge vs. Core: Where the Box Lives

Edge AI server builds look lean: fewer GPUs, smaller NVMe tiers, and ruthless battery/UPS planning. Core builds chase absolute throughput and dense cooling. Test deployments in the dumbest possible environment (noisy branch office, dusty closet) to learn what fails fast.

Data Hygiene: Version Everything That Matters

- Data schema, tokenizer, prompts, and even pre‑/post‑code get versioned.

- Weights have immutable IDs. “Latest” is not an ID.

- Snapshots are cheap; vague memories are expensive.

Resilience: Fail Like an Adult

- Two PDUs on separate circuits. If one dies, the AI server should survive.

- Firmware updates in maintenance windows with a clear rollback.

- Nightly scrub for filesystems that support it; weekly test restores.

Cluster Tricks That Work on Day One

Even before you scale out, adopt habits that age well.

- Node labels for GPU memory size; stop guessing in schedulers.

- Priority classes for p95 SLAs; the urgent job wins on purpose.

- Resource quotas so one team can’t starve the rest.

Compression & Quantization: Speed Without Shame

Your best win might be a smaller model. Try quantization carefully—validate with real users, not just benchmarks. A model that responds in 120 ms beats a genius that needs a nap. The nicest compliment you can get is “it feels instant.”

My Short Anecdote: The “Mop Bucket” Save

During a retail pilot, a store manager kept a mop bucket next to the rack because the old gear overheated and the AC dripped. We dropped in a modest AI server, cleaned airflow, added a real filter, and tuned jobs to run during lull hours. The mop stayed—for nostalgia. The fan noise didn’t.

Runbook Snippets I Reuse Constantly

GPU Health Check

#!/usr/bin/env bash set -euo pipefail nvidia-smi --query-gpu=name,driver_version,pstate,power.draw,temperature.gpu,utilization.gpu --format=csv Disk Heat Map (Quick & Dirty)

for d in /dev/nvme*n1; do t=$(sudo smartctl -A $d | awk '/Temperature:/ {print $10}') echo "$d $t" done | sort -k2 -nTeam Workflow: Make It Feel Like a Teammate

People adopt what they understand. Post job plans in chat with resource asks (“2 × 80GB,” “NVMe scratch 200 GB”). Tag expected runtime. When a job finishes, attach graphs and the commit hash for the model weights. A humble AI server with great notes beats a massive one that hides its homework.

Tables You’ll Actually Reference

| Thing | Symptom | Likely Cause | First Fix |

|---|---|---|---|

| Tokens stall mid‑reply | Fan spike, temp rise | GPU throttle | Clean filters, lower batch, check pump |

| Throughput random dips | NVMe util spikes | Background writes | Move logs, add scratch drive |

| P95 latency drift | CPU pegged | Tokenizer hot path | Pin cores, pre‑tokenize |

| Odd OOMs | Kernel logs quiet | Fragmented VRAM | Restart process, re‑pack models |

Capacity Planning: The Honest Way

- Pick your target SLA (e.g., p95 < 250 ms for a given prompt size).

- Run a week of real traffic and record concurrency and burst windows.

- Size GPU VRAM to fit your biggest working set with 10–20% headroom.

- Size NVMe scratch to your largest batch’s temporary footprint × 1.5.

Capacity is a bet. With a calm AI server, it’s a smart one.

Change Management: Roll Forward on Purpose

- Shadow new models for a day. Compare outputs silently.

- Stage rollouts (5% → 25% → 100%). Keep a kill switch that actually kills.

- Publish a weekly “what changed” note: drivers, weights, configs.

When to Scale Out (Not Just Up)

If one AI server can’t keep p95 in bounds even with tiny batches and tidy models, stop adding cards to the same chassis. Spread the load. Many small failures are easier to survive than one spectacular one.

Two External Reads I Recommend

Want a feel for where the hardware winds are blowing? These digestible reads are worth your bookmarks:

- Tom’s Hardware — steady coverage of server GPUs, storage, and interconnect experiments.

- ServeTheHome — practical lab tests and rack‑level thinking that translate well to the real world.

Copy‑Paste: Minimal Inference Service (Python + FastAPI)

from fastapi import FastAPI import torch from transformers import AutoModelForCausalLM, AutoTokenizer

app = FastAPI()

tok = AutoTokenizer.from_pretrained("your/model")

model = AutoModelForCausalLM.from_pretrained("your/model").to("cuda").eval()

@app.post("/infer")

def infer(req: dict):

ids = tok.encode(req["text"], return_tensors="pt").to("cuda")

with torch.inference_mode():

out = model.generate(ids, max_new_tokens=64)

return {"text": tok.decode(out[0])}Disaster Drill: Practice the Pain

- Block a fan intake and watch your alerts. Do they fire fast enough?

- Yank a drive (on a safe array). Does the rebuild script do the right thing?

- Kill a process mid‑inference. Does the queue resubmit gracefully?

Final Checklist: Ship It, Sleep Better

- Power headroom >= p99 + 15%.

- Cooling curve validated at the hottest hour of the day.

- Storage tiers sized and labeled; scratch cleaned nightly.

- Scheduler enforces priorities; noisy neighbors can’t starve anyone.

- Observability proves health in one page.

- Backups restorable; firmware pinned; change log current.

When it all clicks, an AI server feels… boring. The good kind of boring. Jobs start on time, fans murmur instead of howl, and your Slack stays quiet while the cluster eats work like a metronome. That’s the sign you built the thing right—fast, safe, and honest about its limits.

FoxDoo Technology

FoxDoo Technology